A commenter recently linked to a post by Steve Goddard claiming that “GISS Shows No Warming Over The Last Decade.”

Goddard shows this graph:

and thinks that establishes his claim. So I asked the reader,

Suppose I characterize the global temperature trend by this graph: Is that a valid characterization of what to expect in the future? Why or why not?

The graph I showed (also from “WoodForTrees.org”) shows only a little more than 1 year’s data. Let’s get one thing out of the way: if you think that is a valid characterization of the trend, you’re wrong. If you insist on clinging to that belief, then reason cannot reach you.

Obviously a single year (and a few extra months) is too short a time span to separate the trend from the noise. After all, the noise is pretty sizeable. For monthly data, it’s typically about 0.135 deg.C, and can easily be as large as 0.4 deg.C above or below the existing trend. The trend is presently 0.017 deg.C/yr. You can’t expect to see a change of 0.017 deg.C in a single year when the noise is as much as 0.4 deg.C above and below that trend. So a single year isn’t enough; if you don’t admit that, then leave now because we’re not interested in arguing with fools.

But Goddard’s graph isn’t limited to a single year, it shows a bit less than 10 years’ data. Is that enough? Over 10 years, a trend of 0.017 deg.C/yr will accumulate a net change of 0.17 deg.C. One can easily imagine that this could be swamped by noise which can extend as much as 0.4 deg.C above and below the trend. But it might at least be possible that 10 years’ data would have enough information to tease the trend out from the noise; after all, statistics is a powerful tool for finding real trends in spite of sizable noise. Is there a way to estimate the limits of what the actual trend might be, even for noisy data?
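For anyone who wants to see those numbers side by side, here is a minimal Python sketch. It uses only the figures quoted above (0.017 deg.C/yr trend, 0.135 deg.C typical monthly noise, 0.4 deg.C extremes); the constant and function names are just illustrative.

```python
# Figures quoted in the post
TREND_PER_YEAR = 0.017   # deg.C/yr
NOISE_TYPICAL = 0.135    # deg.C, typical size of monthly noise
NOISE_EXTREME = 0.4      # deg.C, how far a month can stray from the trend

def accumulated_change(years):
    """Net change due to the trend alone over a span of years."""
    return TREND_PER_YEAR * years

for years in (1, 10):
    change = accumulated_change(years)
    print(f"{years:2d} yr: trend contributes {change:.3f} deg.C, "
          f"{change / NOISE_TYPICAL:.2f}x typical noise, "
          f"{change / NOISE_EXTREME:.2f}x extreme noise")
```

Over one year the trend is a small fraction of the typical noise; over ten years it is comparable to the typical noise but still well below the extremes, which is why an uncertainty estimate is needed.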

Yes, there is.

When you estimate the trend using linear regression on actual data, not only can you compute the trend estimate, you can also compute the uncertainty in that estimate. However, one must be careful because the default, “naive” uncertainty estimate assumes that the noise is white noise. White noise is noise for which different values aren’t correlated. We usually think of the different white noise values as being “independent” of each other (although it is possible for noise values to be uncorrelated, and therefore white, even when they’re not independent).
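As a rough illustration of the "naive" calculation, here is a minimal Python sketch of an ordinary least squares trend with its white-noise standard error. The data are synthetic (a 0.017 deg.C/yr trend plus white noise), not the GISS record, and the function name `ols_trend` is just a label chosen for this example.

```python
import math
import random

def ols_trend(t, y):
    """Return (slope, naive standard error) from ordinary least squares.

    The standard error assumes the noise is white (uncorrelated).
    """
    n = len(t)
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    residuals = [yi - (intercept + slope * ti) for ti, yi in zip(t, y)]
    resid_var = sum(r * r for r in residuals) / (n - 2)
    return slope, math.sqrt(resid_var / sxx)

# Synthetic example (NOT real GISS data): 10 years of monthly values
random.seed(0)
t = [2002 + k / 12 for k in range(120)]
y = [0.017 * (ti - 2002) + random.gauss(0, 0.135) for ti in t]
slope, se = ols_trend(t, y)
print(f"trend = {slope:.4f} +/- {2 * se:.4f} deg.C/yr (naive 2-sigma)")
```

With real temperature data this naive range would be too narrow, for the reason given in the next paragraph.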

However, the noise in global temperature data isn’t white noise. Different values are correlated with each other, a phenomenon called autocorrelation. But we can overcome the difficulty induced by autocorrelation of the noise. It can be a bit tricky with global temperature because the noise isn’t even the simplest kind of autocorrelated noise. One often treats autocorrelated noise as “AR(1) noise”, but as it turns out even that model isn’t sufficient. A reasonable approximation is to treat the noise as being what’s called “ARMA(1,1) noise”. I won’t give all the details (although I’ve done so before); I’ll just apply this noise model to estimate the uncertainty in the trend.
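For readers who want a feel for how such a correction can work, here is a minimal Python sketch of one large-sample approximation. It assumes the autocorrelations follow the ARMA(1,1) pattern rho_j = rho1 * phi**(j-1), so the variance of the trend estimate is inflated by roughly nu = 1 + 2*rho1/(1 - phi), with phi estimated as rho2/rho1. This may not be exactly the calculation behind the graph in this post, and the noise parameters below are made up for illustration.

```python
import math
import random

def autocorr(x, lag):
    """Sample autocorrelation of the sequence x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    cov = sum((x[i] - mean) * (x[i + lag] - mean) for i in range(n - lag))
    return cov / var

def arma11_inflation(residuals):
    """Variance inflation factor nu = 1 + 2*rho1/(1 - phi), phi = rho2/rho1.

    Assumes autocorrelations decay as rho_j = rho1 * phi**(j-1).
    Raises ValueError if the estimates do not fit that pattern.
    """
    rho1 = autocorr(residuals, 1)
    rho2 = autocorr(residuals, 2)
    if rho1 <= 0:
        raise ValueError("lag-1 autocorrelation is not positive")
    phi = rho2 / rho1
    if not 0 <= phi < 1:
        raise ValueError("estimated phi is outside [0, 1)")
    return 1 + 2 * rho1 / (1 - phi)

# Synthetic ARMA(1,1) noise (illustrative parameters, NOT fitted to GISS)
random.seed(1)
PHI, THETA = 0.8, -0.3
noise, prev_x, prev_e = [], 0.0, 0.0
for _ in range(20000):
    e = random.gauss(0, 1)
    x = PHI * prev_x + e + THETA * prev_e
    noise.append(x)
    prev_x, prev_e = x, e

nu = arma11_inflation(noise)
print(f"inflation factor nu = {nu:.2f}; "
      f"multiply the naive standard error by {math.sqrt(nu):.2f}")
```

In practice the function would be applied to the residuals of the linear fit, and the naive standard error multiplied by the square root of nu.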

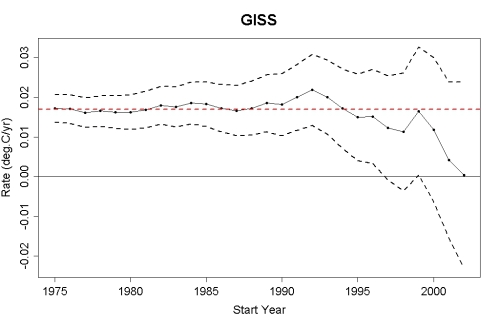

Let’s consider the trend up to the present (through June 2011) from all possible starting years 1975 through 2002. I’ll use GISS monthly temperature data, and I’ll treat the noise as ARMA(1,1) noise when estimating the uncertainty in the trend. That will enable me to compute what’s called a “95% confidence interval,” which is the range in which we expect the true trend to be, with about 95% probability.
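The procedure just described can be sketched in Python as follows. This is only an illustration under stated assumptions: the data are synthetic (a steady 0.017 deg.C/yr trend plus white noise, January 1975 through June 2011), the interval is slope plus or minus 1.96 standard errors, and the autocorrelation correction enters as a single user-supplied factor `nu` (1.0 here because the synthetic noise is white). Real data would need `nu` greater than 1, estimated from the residuals.

```python
import math
import random

def trend_and_se(t, y):
    """OLS slope and its white-noise standard error."""
    n = len(t)
    tm, ym = sum(t) / n, sum(y) / n
    sxx = sum((a - tm) ** 2 for a in t)
    slope = sum((a - tm) * (b - ym) for a, b in zip(t, y)) / sxx
    sse = sum((b - ym - slope * (a - tm)) ** 2 for a, b in zip(t, y))
    return slope, math.sqrt(sse / (n - 2) / sxx)

def trend_intervals(t, y, start_years, nu=1.0, z=1.96):
    """For each start year, fit from that year to the end of the record.

    Returns {start: (lower, slope, upper)}; nu is a hypothetical
    variance inflation factor for autocorrelated noise.
    """
    out = {}
    for start in start_years:
        pairs = [(ti, yi) for ti, yi in zip(t, y) if ti >= start]
        ts, ys = zip(*pairs)
        slope, se = trend_and_se(ts, ys)
        half = z * se * math.sqrt(nu)
        out[start] = (slope - half, slope, slope + half)
    return out

# Synthetic monthly series, Jan 1975 through Jun 2011 (NOT real GISS data)
random.seed(2)
t = [1975 + k / 12 for k in range(438)]
y = [0.017 * (ti - 1975) + random.gauss(0, 0.135) for ti in t]

for start, (lo, slope, hi) in trend_intervals(t, y, [1975, 1990, 2002]).items():
    print(f"from {start}: {slope:.4f} deg.C/yr, 95% CI [{lo:.4f}, {hi:.4f}]")
```

The later the start year, the fewer the data points and the wider the interval, even though the underlying trend in this synthetic series never changes.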

For each year, I’ll plot the estimated trend to the present as dots-and-lines, and the upper and lower limits of the 95% confidence interval as dashed lines. I’ll also plot the estimated trend since 1975, which is 0.017 deg.C/yr, as a horizontal dashed line in red. If at any time the upper limit of the 95% confidence interval (upper dashed black line) dips below the level 0.017 (horizontal dashed red line), then we have evidence (not proof, but at least evidence) that the trend is no longer upward at the same rate. Here you are:

Does the upper dashed line dip below the horizontal dashed red line? No.

The fact is this: there’s no evidence that the rate of global warming (the trend, not the noise) for the last decade is any different than it has been since 1975.

But Steve Goddard thinks there is. It seems that he thinks so because when he fits a trend line to less than 10 years’ data, the line is horizontal. He utterly fails to estimate the uncertainty in that trend. Frankly, I doubt he really knows how. And he either ignores, or outright exploits, the existence of noise to give a false impression of the trend.

I’ve done posts like this before, many times. The reason is that this is one of the most common techniques used by fake skeptics to give a false impression — exploit noise to give a false impression of the trend. It’s not just one of their favorite techniques, it’s one of their most successful because most people don’t have the statistical savvy to see through it. You could even write a book about it. It seems that they do this whenever any global warming indicator dips because of noise, but when a global warming indicator peaks because of noise, the silence is deafening. Cold winter in England? Proof! Hottest ever April in England? Silence.

Last but not least, I’ll tell you why Goddard chose to start with 2002. You might think it’s because it has been almost 10 years since then, and 10 years is a nice “round number.” The truth is — whether Goddard will admit it or not, even to himself — that he did so because he’s cherry-picking. He’s deliberately starting with a year which had extra-high temperature due to noise rather than trend, so he could paint a false picture of the trend by exploiting that noise.

I’ll bet Steve Goddard thinks he’s a skeptic. I think he’s a fake skeptic.

“I’ve done posts like this before, many times.”

Yes, you have, and thank you for your continued patience with those of us that don’t have the statistical savvy to work it out for ourselves.

The “take home” for anyone who wanders in here from Goddard Land, Watts Land, Milloy Land, Morano Land, (or any other of homes of the noisy cherry pickers) is most succinctly shown in your last graph. No statistics, calculus or even algebra is needed to see the sham involved.

Perfectly put.

I think it would be nice if Wood for Trees added this tool to their (excellent) site.

It bothers me that climate researchers are bearing the burden of providing basic education.

Why, Richard? It is posts like this one, more explanatory than the actual maths, that someone like me can understand, and that therefore help me to see the duplicity in some of the claims out there.

In my opinion, I would rather learn these “basics” of statistical education from a real stats guy than from someone pretending to be a stats guy. After all, in the end it will be me and others like me that will ultimately decide whether we as a society do something about this or not!

I think Richard’s point is that the basic education that people receive from their schooling should *already* allow them to see through these shams.

It wasn’t that long ago 1998 was the cherry to be picked.

Now it’s 2002.

Has the price of cherries gone up?

They are being forced to push the “cycle peak” back because it isn’t happening. Back in 2007/2008 they were pushing cooling due to the solar minimum and PDO switch. They thought the 2008 La Nina was the beginning of a big drop. Didn’t happen.

They are going to face a problem in the coming decade if the world continues warming, which I think it will, because they’ve hung their hat on the “natural cycles” causing cooling.

It will be interesting to see how they handle it. My guess is they will go quiet about their past predictions, including throwing a number of folks who publicly predicted cooling under the bus never to be heard of again. Then they’ll set about coming up with an excuse for why the warming is natural and try to paint a picture that skeptics expected natural warming to continue all along.

Another thing to point out is that even someone who doesn’t understand the statistics could extend Goddard’s investigation backwards in 10 year chunks …

1992-2002 very rapid warming (around 0.5C!)

1982-1992 much flatter

and hypothesize a cycle (incorrectly, but those guys love cycles). And the prediction of that cycle for the next decade would be … ouch.

A more serious prediction for the next decade would be the long term trend (0.17C) plus a small amount for starting from a La Nina Boreal winter plus/minus the noise you’ve characterized above, plus/minus an unknown effect of how fast Chinese CO2 emissions will increase relative to sulfate aerosols. E.g. will they build new coal plants faster than they install scrubbers. I can’t answer that one, but eventually sulfate aerosol emissions will decrease, and when that happens the temperature trend will increase.

GFW

A number of the existing Chinese coal plants may already have scrubbers but they are not being used because of the cost of operation and the government is letting them get away with it because plant managers are in good standing with the Party.

But there is growing pressure from the Chinese public to do something about the terrible air quality.

Now if it’s true that these scrubbers do exist, and depending how many plants have them, and if it’s only a matter of flipping some switches to activate them, and once the aerosols settle out….

That temperature trend increase may be closer than anyone thinks possible.

I just got back from Chongqing, where my wife’s family lives. I’ve been going there for 10 years and the air was noticeably cleaner this year. My wife’s family said that, indeed, the government has been actively shutting down older dirtier coal-fired power plants and it’s making a big difference.

Just so I’m clear on this: is it now accepted (settled as it were) that coal fired power plants in China have slowed or negated global warming?

[Response: In my opinion, it is not confirmed. In fact I don’t see that it’s even necessary. Have a look at this. I’m not saying it’s wrong — just that I’m not convinced.]

Has the deep ocean heat sink hypothesis been disproved, discarded or simply awaiting further exploration/discovery?

[Response: I think it’s very likely that the deep ocean is absorbing significant quantities of heat.]

Thank you for your time and efforts.

This is very much like my discussion with Deech56 on the other thread. As 2011 has unfolded, it’s trending, meaninglessly but with irony, up.

This is 2002 to 2011. 9 years.

And this is what WfT shows for 2011 thru May. According to NOAA, June will be of little help to skeptics.

I’m a math illiterate. WfT should probably block me. I have no idea why it does what it does. The two trend lines are both up. Add Jan thru May to the 9 years, and it’s roughly flat. What is up with that?

The reason one upward trending series plus another upward trending series = one flat series is that there is a large drop from Dec 2010 to Jan 2011. This drop is not included in either individual series. So you really have this:

1 rising series + 1 big quick drop + 1 rising series = 1 flat series
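A small Python sketch with made-up numbers shows the effect. The values below are not the GISS data; they are two straight rising segments with a drop between them, chosen only to illustrate the arithmetic.

```python
def ols_slope(t, y):
    """Ordinary least squares slope of y against t."""
    n = len(t)
    tm, ym = sum(t) / n, sum(y) / n
    sxy = sum((a - tm) * (b - ym) for a, b in zip(t, y))
    sxx = sum((a - tm) ** 2 for a in t)
    return sxy / sxx

# Made-up series: 108 months rising slowly, then a drop, then 5 months rising fast
t_a = [k / 12 for k in range(108)]              # years 0 to just under 9
y_a = [0.55 + 0.005 * t for t in t_a]
t_b = [k / 12 for k in range(108, 113)]         # the next 5 months
y_b = [0.40 + 0.25 * (t - 9.0) for t in t_b]    # starts well below where y_a ended

slope_a = ols_slope(t_a, y_a)
slope_b = ols_slope(t_b, y_b)
slope_all = ols_slope(t_a + t_b, y_a + y_b)

print(f"first series:  {slope_a:+.4f} per year")
print(f"second series: {slope_b:+.4f} per year")
print(f"combined:      {slope_all:+.4f} per year")
```

Both pieces rise, but the low-lying points at the end pull the combined line down until it is much flatter than either piece.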

JCH, using the “view the raw data” option suggests that the “2002–2011” graph actually uses data from June 2002 to May 2011. I’ve no idea how to get it to plot data from exactly January 2002 to exactly December 2010…

I guess the moral is, when in doubt, look at the raw figures?

— frank

Frank,

You mean this

this 2002-2011?

This is a 12-sample running mean so the first possible result occurs 6 months after the start of the period and the last occurs 6 months before the end (in May 2010, of course, not 2011).

Jan 2002 to Dec 2010 (inclusive)? try this.

But you want a 12-month running mean? You have to start 6 months earlier and finish 6 months later.
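The loss of points at the ends is easy to check with a short Python sketch. This is a generic 12-sample running mean, not WfT's actual code, and how WfT labels the time of each averaged point is not something this example tries to reproduce.

```python
def running_mean(values, window=12):
    """Mean of each run of `window` consecutive values."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

monthly = list(range(120))          # 10 years of placeholder monthly values
smoothed = running_mean(monthly)

print(len(monthly), "monthly values in,", len(smoothed), "averaged values out")
```

A window of 12 always returns 11 fewer values than it was given, so the raw data have to extend beyond the period you want the smoothed curve to cover.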

frank, you should not give me clues. I actually got a graph with 108 months of raw data, all 2002 thru 2010, by starting on 2001.5 and ending on 2011.42.

If I try 2011.4, it drops the last month.

As for 2011, 2011 to 2011.42 has 6 months of 2011 data.

And 9 + years (114 months,) is this.

JCH,

We got the same result using a slightly different To (time). :)

It’s worth pointing out that at WfT the From (time) is included but the To (time) is not, i.e., the range stops at the point immediately before that. This always applies.
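In programming terms that is a half-open range, From <= t < To. Here is a minimal Python sketch of the idea; the decimal-year encoding of months (January 2002 as 2002.0, February as 2002 + 1/12, and so on) is an assumption made for the example and may not match how WfT stores its time values.

```python
def in_range(times, t_from, t_to):
    """Keep times with t_from <= t < t_to (From included, To excluded)."""
    return [t for t in times if t_from <= t < t_to]

# Assumed encoding: month k after Jan 2002 sits at 2002 + k/12
times = [2002 + k / 12 for k in range(120)]   # Jan 2002 through Dec 2011

picked = in_range(times, 2002, 2011)
print(len(picked), "months selected; last one at", round(picked[-1], 3))
```

With From = 2002 and To = 2011 the selection covers January 2002 through December 2010, because the point at exactly 2011.0 is left out.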

I can certainly say that Girma should be blocked.

RN – you can’t block engineers. As a consequence, bridges could fall into rivers.

Actually, in Girma’s case, I think one can argue that *failing* to block him could lead to bridges falling into rivers.. Non-existent bridges and rivers, but since he lives in his own fantasy world, they’d be real enough to him.

Should probably block you? You are only doing what anyone else can do, but the great thing is that we can all see what you’re doing.

WfT linear trends are just standard OLS trends. Put the data into a spreadsheet or math package and you’ll get the same result. (For anyone who doesn’t know, click on ‘raw data’ under the chart. Or get it from the source if you have any doubts.)

Are you serious about not knowing? ;)

Why on earth wouldn’t she or he be serious? This is specialized knowledge. It’s not like knowing what year this is or how to waltz. Wait, that’s specialized too. But anyway, you tossed out an acronym (OLS). It turns out to have something to do with lines and squares. Squares? How did squares get into the picture? Well in this case “square” means the square of a number, not a four sided figure. What then is a “least square?” Zero?

… It goes back to the idea of the distance from a line to a point not on the line (even if the line is extended). This distance is defined as the length of a line segment from the point to the line, perpendicular to the line (extended if necessary). You proved in geometry class that there always is such a perpendicular. How do squares and square roots get into it? Recall the Pythagorean theorem ….

But in this statistical business you don’t have a line. You start with a bunch of points. You seek a line such that the sum of all the distances from the points to the line (all taken as positive regardless of which side of the line a point may lie on) is a minimum. It turns out that

a) There is such a line, and

b) It is not too hard to find it, except that it would take a whole pack of envelopes or cocktail napkins if you start with very many points.

c) After you actually do a couple examples by hand with a small number of points it becomes clear enough.

Not sure I understand how your second paragraph applies to the concept of least squares regression. As I recall, it is the sum of the squared vertical distance between the line and the points that is minimized.
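A short Python sketch makes the point concrete. The five points are made up; the fit uses the standard closed-form least squares formulas, and nudging the slope or intercept away from the fitted values can only increase the sum of squared vertical distances.

```python
def fit_line(points):
    """Least squares slope and intercept for a list of (x, y) points."""
    n = len(points)
    xm = sum(x for x, _ in points) / n
    ym = sum(y for _, y in points) / n
    sxy = sum((x - xm) * (y - ym) for x, y in points)
    sxx = sum((x - xm) ** 2 for x, _ in points)
    slope = sxy / sxx
    return slope, ym - slope * xm

def sum_sq_vertical(points, slope, intercept):
    """Sum of squared vertical distances from the points to the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in points)

points = [(0, 1), (1, 3), (2, 2), (3, 5), (4, 4)]   # made-up data
slope, intercept = fit_line(points)
best = sum_sq_vertical(points, slope, intercept)

for d_slope, d_icept in [(0.0, 0.0), (0.2, 0.0), (-0.2, 0.0), (0.0, 0.5)]:
    sse = sum_sq_vertical(points, slope + d_slope, intercept + d_icept)
    print(f"slope {slope + d_slope:.2f}, intercept {intercept + d_icept:.2f}: "
          f"sum of squares = {sse:.2f}")
```

The vertical distances are squared, so points above and below the line both count as positive without any special handling.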

I thought that JCH might be playing with us. ;) My stats knowledge is very basic so I assume anyone else commenting here knows more than I do.

Why “least squares”? My simple understanding is that if we have (or imagine) a line joining the data points and want a straight line to show the trend, we need to minimise the total of all the areas enclosed by the 2 lines. There’s no need to take all the distances as positive because a square of a negative number is positive anyway. “Ordinary” just means linear (straight-line) as opposed to non-linear (curved) trends.

Oooops! Torsten is right (he said with a red face). ;^(

Pete,

I hope you realised that my reply was to you, not Torsten. :)

One of the problems of WordPress seems to be that replies can be nested only so far, so they can get detached quite quickly. No comment numbers either, which is what I use at Deltoid to make it clear who I’m replying to.

JCH: Your yearly graphs display 12-month rolling averages of anomalies. Your monthly graph displays monthly anomalies without averaging. The anomaly fell 0.29 degrees from mid-November 2010 to mid-December 2010, and anomalies since then have been below 2010’s average anomaly, though they’re somewhat increasing, as the monthly trend line shows.

Meow:

Oh OK… I think that does explain what JCH was seeing,

— frank

frank and Meow and TS – I’ve been working with the raw data to better understand how the graphing features work. Thanks for the tips.

Could someone either explain the following link or point me to where it is explained? The topic, like this post, involves proper time horizons to evaluate Global Warming but gets a different answer than what I usually hear. Much appreciated.

http://bartonpaullevenson.com/30Years.html

What do you usually hear?

(BPL is not someone who makes stuff up.)

I usually hear 12-20 years: the length of time it takes to establish a statistically significant trend with an alpha of .05. The length of time needed depends on the SNR, so one can’t say in advance how long one would need to wait before a significant trend could be calculated, since we don’t know beforehand how much variance will be in the data.
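The dependence on signal-to-noise can be sketched in Python under idealized assumptions: evenly spaced monthly data, noise of known size, a 2-sigma threshold, and a hypothetical variance inflation factor `nu` standing in for autocorrelation. The trend and noise figures come from the post; the `nu` values are purely illustrative, and the result is the record length at which the expected trend equals the threshold, not a guarantee for any particular data set.

```python
import math

def slope_se(n_months, sigma, nu=1.0):
    """Standard error of an OLS slope (per year) for n evenly spaced months."""
    # Sum of squared deviations of time from its mean, with time in years
    sxx = n_months * (n_months ** 2 - 1) / 12 / 144
    return sigma * math.sqrt(nu / sxx)

def months_needed(trend, sigma, nu=1.0, z=2.0):
    """Smallest record length at which trend exceeds z standard errors."""
    n = 3
    while trend <= z * slope_se(n, sigma, nu):
        n += 1
    return n

for nu in (1.0, 2.0, 5.0):   # illustrative inflation factors
    n = months_needed(0.017, 0.135, nu)
    print(f"nu = {nu}: about {n / 12:.1f} years")
```

More noise, or more strongly autocorrelated noise, pushes the required record length up, which is why no single number of years applies to every data set.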

The other one I hear is 30(ish) years. The justification I hear for this one is that it is long enough so that various quasi-oscillatory phenomena (ENSO, the 11-year solar cycle, etc.) can be corrected for. So you do a Fourier transform, attenuate the wavelengths associated with known oscillatory phenomena, then convert back to the standard domain. To do this requires a time length of around thirty years to be able to correct for the most common cycles that strongly influence short (less than a century) time lengths.

I personally prefer the “30 years” definition, since it’s based on prior knowledge of climatic cycles, while the “12–20 years” one has an ex post facto feel to it. But that’s just me.

— frank

30 years is probably from WMO’s definition of climate – the average of 30 years of weather. And, yes, their definition really is that simple.

Starwacher, here’s a good place to start, aimed at high school students:

http://moregrumbinescience.blogspot.com/2009/01/results-on-deciding-trends.html (see the related threads and files available on his site for more)

What might be entertaining one day is to randomly select a population of climate skeptic graphs, and look for any correlation between starting-point and temperature.

Well written, Tamino.

I am _so_ tired of this tactic. I really wish tools like WoodForTrees included AR(1) and ARMA(1,1) models so that more people could evaluate the issues involved.

Please don’t make me go to Goddard’s blog any more. It hurts. It is not a good place to go if you value your sanity. I used to go there a lot, but I’m recovering. I did not need to see that the same crowd of maroons is still there, still picking the same cherries, still issuing the same dullwitted insults to anyone who dares to mention any actual science.

Looks like another vote for “global warming stopped in 2002.”

I’ll have to update my “When Did Global Warming Stop” article. BTW, other sightings of ‘warming cessation’ claims you may come across will be gratefully accepted, if you’d like them documented. Just email me a link.

You’re very silly. Global warming stopped in 2100. Can’t you see the 2100-2108 decline?

Ah, yes. Duly noted. . .

This meme will never die at the current rate of growth of CO2 forcing which means there will continue to be a plentiful supply of 10 year periods over which the noise is stronger than the signal. So get used to it folks.

Ah but don’t underestimate the power of being able to reply “that’s what you claimed 10 years ago”

Yep, that’s the point of “When Did Global Warming Stop?” The plethora of dates starts to look a little silly after a while.

Thank you for taking the time to counter the rubbish that is presented on other sites. From some of your comments, it obviously seems like a thankless task, but there are many like me who listen quietly to and agree with what you say.

On the issue of confidence limits, it might be an interesting illustration to see how the limits decrease with an increasing sample. So showing a rolling 10 year sample trend with 10 year confidence limits against the graph you show above. This might illustrate that the current situation is not an exception and that the ‘pause’ is illusionary.

Apologies, I’ve been expanding to frequently cross-link to this blog at one particular place, past articles included and this thread too. Don’t be surprised that some staunch ”ain’t true” positioned folk will come here to interject. 99.9% seem though to be staying in their comfi-zone and not venture out to find that (sigh) Inconvenient Truth. Keep debunking Tamino.

Ray Ladbury’s comment about this ”world governing body” and IPCC perception, State side, is very right on the nail. The US regular population is in origins refugee of [feudalistic] government and suppression, so deep-seated it is no doubt. 5% of world population using 30% of resources… that’s what the other 95% is observing and every attempt to change BAU fervently objected. Amazing this to be from the country where adversity can be turned into enormous opportunity easier than any other place in the world, and get to breathe cleaner air and drink safer water on the go.

Tamino, was just wondering if you have seen Zeke’s recent article at Lucia’s? If you have, could you or another literate stats guy give a very simple explanation as to why the confidence bars start to widen at the end of his graph. I appreciate Zeke’s insights, but find that sometimes his explanations are above my understanding.

Leo,

The CIs in my graph represent an estimate of sampling error, not trend as we are discussing here. They go up a tad at the end because there are considerably fewer stations post-1992 in GHCN and my chosen method is sensitive to the number of available stations.

It’s been shown (I forget by whom) [Response: Mojib Latif.] that a decade of no warming, and even cooling, is quite consistent with model predictions; the fakers distorted that to a prediction of upcoming cooling. By chance, the fakers got that decade in the 2000s and life will never be so good for them again. They liked it so much they’re refusing to leave 2009 behind.

It was also shown by Easterling & Wehner. Fakers have performed analyses claiming climate models are not consistent with periods of non-warming. Why these analyses are stupid is shown here.

I’m wondering how the fake skeptics are going to try to spin it when warming snaps back into line with the long term trend.

This is going to be an interesting decade on many many levels.

Rob: given that 2011 is quite warm, even with a strong La Nina, I suspect you won’t have to wait more than a couple of years to see how they try to spin it.

I’m hoping that the general public (and, more importantly, the media) wakes up to the fact that they’re full of it, and their ‘predictions’ are really not based on any understanding of how the physical world really works.

Bern, I’m sure you’re right, but I think later this decade temps are going to make a big jump in the positive direction. When these guys have been harping so long and so hard on an upcoming cooling trend, what are they going to possibly say when exactly the opposite happens?

Well, based upon my experiences, they’ll ignore it.

For instance, do you hear any of them saying word one just now about Arctic (or, for that matter, Antarctic) sea ice?

They’ll just change the subject to, oh, why back-radiation contravenes the Second Law, or something.

I should have added, though, that they do tend to look that much more ridiculous and that much less credible every time they do.

“..what are they going to possibly say when exactly the opposite happens?”

“I’m not Steven Goddard. Never heard of him.”

“I’m not Steven Goddard. Never heard of him.”

Oh, no. Goddard will never admit a mistake. Not under any circumstances. Ever.

There is a frequent commenter at Climate Etc. who is a scientist. I first started seeing his comments on blogs around 2007: on RC. He thinks we are in a cool phase, and will be for decades. The first comments I saw of his on RC stated a belief that global temperature would essentially move sideways from 1998. So I would imagine he looks at the graphs and sees confirmation of his beliefs and his prediction. On the other hand, Smith et al 2007 made a forecast about half the coming years setting global temperature records. However, they stated the first few years of the period, starting ~2005, would see natural variability suppress the AGW signal, and subsequent years would see the resumption of AGW causing record temps in half the years. With both 2009 and 2010 being hot years, in spite all the ballyhoo about the negative PDO and a strong La Nina and a napping sun, I would imagine they look at the graphs and see nothing that proves them wrong. They made a prediction about 2014 that I think will be important.

If the globe cools, there will be a lot of chest beating. If the Smith et al 2007 forecast proves essentially correct, this orb will be hot hot hot.

yeah the “skeptics” are in for a rough ride and I am eager to see how they play it

Is 2011 warm? The west coast of North America and middle certainly isn’t. Neither is western Europe. Where are you from?

We all know the answer: they will recycle old claims and invent some new ones. These will not include rising CO2 levels, of course, except to “prove” that they have zero or minimal influence on temperature.

One of the sad ironies of this situation is that any joy taken in seeing the “skeptics” proved wrong again and again is overwhelmed by the fact that things would be so much better if they were right! I get the strong impression that some of them just see this as a game that must be “won”. I wonder at what time in the future they will have no influence, and, for example, what percentage of the sea ice will have been lost by then.

“What percentage of the sea ice will have been lost by then. . .”

Sadly, I’m betting 100% (summer, not winter.) I don’t think anything else is likely to crystallize opinion into a more realistic vein first, and I think that ice-free summer’s going to happen sooner than many (non-skeptics!) think.

But that’s FWIW–I have no particular claim to relevant expertise, and have been wrong SO many times before!

Bobby: I suspect the answers to your questions are “When there isn’t a buck to be made out of it any more”, and “Very, very little”.

Many of the most effective “sceptics” suffer from “Emeritus Syndrome”. Their influence will, sadly, not disappear until they are no longer with us.

From Wikipedia: Decadal Anomalies – better known as a 10-year boxcar smoothing (not moving boxcar) – http://en.wikipedia.org/wiki/Instrumental_temperature_record

Temperature anomaly relative to the 1951–1980 mean:

| Years | Anomaly (°C) | Anomaly (°F) |
|---|---|---|
| 1880–1889 | −0.274 | −0.493 |
| 1890–1899 | −0.254 | −0.457 |
| 1900–1909 | −0.259 | −0.466 |
| 1910–1919 | −0.276 | −0.497 |
| 1920–1929 | −0.175 | −0.315 |
| 1930–1939 | −0.043 | −0.0774 |
| 1940–1949 | 0.035 | 0.0630 |
| 1950–1959 | −0.02 | −0.0360 |
| 1960–1969 | −0.014 | −0.0252 |
| 1970–1979 | −0.001 | −0.00180 |
| 1980–1989 | 0.176 | 0.317 |
| 1990–1999 | 0.313 | 0.563 |
| 2000–2009 | 0.513 | 0.923 |
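A note on the two columns: because these are anomalies (differences from a baseline) rather than absolute temperatures, converting from °C to °F is just a multiplication by 1.8, with no 32-degree offset. Here is a minimal Python sketch using the °C values listed above; the variable and function names are only illustrative.

```python
# Decadal anomalies in deg C, copied from the list above
DECADAL_C = {
    1880: -0.274, 1890: -0.254, 1900: -0.259, 1910: -0.276,
    1920: -0.175, 1930: -0.043, 1940: 0.035, 1950: -0.02,
    1960: -0.014, 1970: -0.001, 1980: 0.176, 1990: 0.313,
    2000: 0.513,
}

def anomaly_c_to_f(delta_c):
    """Convert a temperature DIFFERENCE from deg C to deg F (no offset)."""
    return delta_c * 1.8

for decade, c in DECADAL_C.items():
    print(f"{decade}s: {c:+.3f} C = {anomaly_c_to_f(c):+.4f} F")

# Decade-to-decade change over the last three decades, in deg C
step_90s = DECADAL_C[1990] - DECADAL_C[1980]
step_00s = DECADAL_C[2000] - DECADAL_C[1990]
print(f"1980s to 1990s: {step_90s:+.3f} C; 1990s to 2000s: {step_00s:+.3f} C")
```

The converted values agree with the °F figures in the list to the precision shown there.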

The next decade shows early signs of going even higher.

Thanks for drawing that to my attention, Owen.

Here’s a graph of that (each average point is plotted at the beginning of its decade). Anyone know why the averages are very close to, but not exactly the same as, the ones that Owen gave us from NASA? Is it the difference between GISS and GHCN?

TS, a lot of them appear to essentially agree with GISS. How up to date is wikipedia? Maybe they have not picked up a 1934-level correction!

JCH,

The Wikipedia article links to the data. I must confess that I haven’t looked at that and compared it with the GISS data at WFT (which is updated daily).

Here are the 2 sets of averages with Wikipedia on the left and WFT GISTEMP (rounded to 3 dp) on the right (all °C):-

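| Years | Wikipedia | WFT GISTEMP |
|---|---|---|
| 1880–1889 | −0.274 | −0.274 |
| 1890–1899 | −0.254 | −0.256 |
| 1900–1909 | −0.259 | −0.261 |
| 1910–1919 | −0.276 | −0.276 |
| 1920–1929 | −0.175 | −0.176 |
| 1930–1939 | −0.043 | −0.042 |
| 1940–1949 | 0.035 | 0.035 |
| 1950–1959 | −0.020 | −0.020 |
| 1960–1969 | −0.014 | −0.014 |
| 1970–1979 | −0.001 | −0.001 |
| 1980–1989 | 0.176 | 0.175 |
| 1990–1999 | 0.313 | 0.313 |
| 2000–2009 | 0.513 | 0.516 |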

This can’t be a baseline shift as all the numbers would be shifted up or down by the same amount. The differences are minute but I’m still curious.
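The baseline argument can be checked directly with a few lines of Python. The two columns below are copied from the comparison above; a baseline shift would make every difference the same, so the sketch simply lists the differences and looks at their spread.

```python
# (decade start, Wikipedia value, WFT GISTEMP value), all in deg C
ROWS = [
    (1880, -0.274, -0.274), (1890, -0.254, -0.256), (1900, -0.259, -0.261),
    (1910, -0.276, -0.276), (1920, -0.175, -0.176), (1930, -0.043, -0.042),
    (1940, 0.035, 0.035), (1950, -0.020, -0.020), (1960, -0.014, -0.014),
    (1970, -0.001, -0.001), (1980, 0.176, 0.175), (1990, 0.313, 0.313),
    (2000, 0.513, 0.516),
]

# Difference Wikipedia minus WFT, rounded to the 3 decimals the data carry
diffs = {decade: round(wiki - wft, 3) for decade, wiki, wft in ROWS}

for decade, d in diffs.items():
    print(f"{decade}s: {d:+.3f}")

spread = max(diffs.values()) - min(diffs.values())
print(f"differences range over {spread:.3f} deg C, so they are not a constant offset")
```

The differences vary in both sign and size, which rules out a simple change of baseline.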

Too close to be different datasets, then. Likely the NCDC label on the Wikipedia set was erroneous, and the Wikipedia data is really GISTEMP. If I had a moment just now, I’d check. . .

Had *just* a moment; from NCDC yearly means, I calculated the 00s at .577–oops, that was ’01-10, not ’00-09. Back to the drawing board. . .

OK–

Here’s the NCDC numbers as I calculated them (well, Excel and I.)

(All anomalies in degrees C, all values to 3 places.)

| Years | NCDC anomaly |
|---|---|
| 1890-99 | -.228 |
| 1900-09 | -.293 |
| 1910-19 | -.288 |
| 1920-29 | -.164 |
| 1930-39 | -.021 |
| 1940-49 | .059 |
| 1950-59 | -.018 |
| 1960-69 | .032 |
| 1970-79 | .045 |
| 1980-89 | .216 |
| 1990-99 | .381 |
| 2000-09 | .554 |

Contrary to what I thought I knew, the anomalies are *higher* than GISTEMP, which I presume is a baseline thing.

Kevin,

The Wikipedia article that Owen quoted links to “NASA data”, which is titled GHCN, not NCDC.

I’ve checked WFT’s averages and I get exactly the same results using the same GISTEMP monthly data. I get exactly the same results again using the monthly figures in the GHCN table. I wondered if, perhaps, the Wikipedia article instead used the averages of the yearly (J-D) averages but these didn’t exactly match the figures in the article either. The only conclusion I can come to is that when the Wikipedia averages were calculated, the GHCN figures were slightly different to the current ones, i.e., they have since been adjusted or corrected.

I have searched high and low for a source other than Wikipedia for those numbers, with no luck. It looks to me as though they have to be calculated. Isn’t it possible somebody just added them up wrong?

JCH,

Yes, I think they were calculated by the author of that part of the Wikipedia article, as the link points to the GHCN monthly data, not to any source of the averages themselves. Given how small the discrepancies are, it’s hard to imagine what error could have been made in the calculations, which is why I’m wondering about small changes in the data.

And now Pat Michaels has an article in Forbes claiming that there is no ‘statistically significant warming in the last 15 years’. I guess it must be cherry season.

Someone should ask Michaels how often 15 years of lower tropospheric temperature data *ever* results in trends with a statistical significance at the 95% level, even when we know that there is statistical significance with larger sample sizes. I guess we are going to see the same 15 year time frame from “skeptics” for some time to come; last year it started in 1995, this year 1996, next 1997… eventually it will have to be 14 years, or 13, as warming accelerates. At this point, deception seems to be their best hand to play.

I read the article in Forbes. It starts off:

Wow. He even cherry picks a specific month in 1996, so that his starting point has the highest anomaly possible. When are people going to stop listening to these deceitful fake skeptics?

Wow, it looks like the comments are by comment-bots explicitly designed to make Pat Michaels look sane…if that’s true then all I can say is well-played Dr. Michaels!

Ah, yes, Forbes, that prestigious climate science journal…Oh, wait.

Shouldn’t that fricking tell them something?

Tamino, as part of the update of my “When Did Global Warming Stop?” article, I’ve linked this post. Let me know if you’d prefer me to pull the link for some reason.

Most regulars here have already read WDGWS–and candidly it’s not worth visiting just for the update–but for those who’ve missed the article altogether, and who may be interested, the link is:

http://hubpages.com/hub/When-Did-Global-Warming-Stop

I wrote a Mathematica demonstration that allows you to investigate trends within random walks and within the GISS dataset. The demonstration requires the free Wolfram CDF player. The demonstration is at

http://demonstrations.wolfram.com/ShortTermTemperatureTrendsWithinLongTermWarming/

There is something else that looks wrong with the graphs. There seem to be more points above 0.0 than below on the y axis. Shouldn’t it be equal?

Sylvain,

Which graphs?

uh. Aren’t there graphs in the article posted above?

@Sylvain:

why would you expect, for any of those graphs, that there should be as many points below the axis as above?

The first graph shows temp anomaly relative to an earlier cooler period. It’s warmer now, so all the data should be positive, as it is.

Second graph, same answer.

The third graph shows rate of warming, for a period (since ~ 1975) in which the rate has been constantly positive. The positive rate is reflected in the positive values for rate – therefore, above zero.

Fourth graph, same answer as first and second graph.

Why on earth would you expect, for any of those graphs, that half the plotted points “should” be below zero?

Sylvain,

Yes but there are 4 there and they don’t all show the same thing, do they? Only 2 even show 0.0 on the y-axis. Do you understand what the y-axis actually represents in each case?

It looks like Lee has the answers anyway.

Thanks Lee. That sort of explains what is on the graph but it also just makes it another problem then.

So you have said that there is a benchmark from an earlier time. I use benchmarks all the time. The most common mistake for benchmarks in systems analysis is representing only the average behaviour. The benchmark is supposed to be representative of the real data (which is the data being shown). Why was this previous average used to represent the data, and how is it similar to the real data? How was it validated? Averages ignore the variance. Constant values as benchmarks are undesirable since they can cause synchronizations that lead to faulty conclusions.

[Response: You are mistaken.

The baseline is not a benchmark. It’s just an arbitrary zero point. The point is to determine how temperature is changing. For that purpose, any baseline will do. You could even use absolute zero and express temperature in Kelvins, and the changes would be the same. In fact the graph would be identical except for the zero point, and the numbers on the left-hand axis.

The reason they don’t use an absolute benchmark is that we can determine the changes with greater precision than we can determine the absolute average temperature. Regardless which baseline you choose, we’ve still seen about 0.9 deg.C warming since 1900 and about 0.6 deg.C warming since 1975. That’s what global warming is about — not about some arbitrary baseline. In fact every one of the major temperature records uses a different baseline, most were chosen because they were convenient *at the time they were defined*, and they haven’t been changed because it don’t mean a thing. They all tell the same story of warming.

The whole “benchmark” objection is a total red herring — it’s meaningless. Until you internalize that, you’re working from a fundamentally mistaken perspective.]
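The point about baselines can be checked numerically. Here is a minimal sketch, assuming a made-up monthly series built from the 0.017 deg.C/yr trend and 0.135 deg.C noise figures quoted in the post (the series itself, the white-noise simplification, and the two offsets are illustrative assumptions, not real GISS data). It fits a least-squares trend to the same series under three different zero points.

```python
import random

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

random.seed(0)

# Hypothetical monthly "anomalies" from 1975: linear trend plus white noise.
# The numbers 0.017 and 0.135 are the figures quoted in the post; the
# series is synthetic and the noise is simplified to white noise.
years = [1975 + m / 12 for m in range(12 * 36)]
anoms = [0.017 * (t - 1975) + random.gauss(0, 0.135) for t in years]

# Same data expressed against two other arbitrary zero points.
rebased = [a - 0.3 for a in anoms]     # a later, warmer baseline (assumed offset)
shifted = [a + 287.0 for a in anoms]   # a large arbitrary offset (assumed value)

slope_original = ols_slope(years, anoms)
slope_rebased = ols_slope(years, rebased)
slope_shifted = ols_slope(years, shifted)

print(slope_original, slope_rebased, slope_shifted)
```

The three slopes agree to within floating-point rounding, because a constant offset cancels out when the mean is subtracted in the regression. Only the numbers on the vertical axis move.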

Sylvain:

What the heck is Sylvain talking about? It’s like he’s just throwing out fancy words which don’t actually mean anything — an instance of ‘if you can’t dazzle them with brilliance, baffle them with bullshit’.

This is grade school level stuff. The idea that subtracting a constant value from a series of numbers will change the trend from down to up — that’s just a laughably stupid idea. Work out the darn algebra for yourselves, folks.

— frank

I explained how they teach benchmarking and data presentation in computer systems analysis; I suppose climate science must be different. Support for the benchmarking methodology I used can be found in any textbook on Computer Systems Performance Analysis in nearly any university bookstore. For an obvious example, if you had a sinusoidal graph you wouldn’t put the zero baseline just anywhere. It actually does matter: you put zero in the middle of the y axis. The rule of thumb is that if you have a time series, you show the data and the zero point should not detract from the graph, i.e. you want the data to stand out rather than the baseline.

The data has to have context.

[Response: You don’t get it. It’s not a “benchmark.” Your calling it one, doesn’t make it so.

This is pretty basic. Perhaps you should invest some effort into acquiring context for your own understanding.]

Sylvain,

So, it does appear that you didn’t understand the meaning of the numbers on the y-axes, and this explains your question about points above and below zero. It is strange that anomalies seem so hard for some to understand, but perhaps you now realise that it’s a good idea to ask questions instead of making unfounded accusations?

Sylvain:

Let me guess, dude … you’re unaware that our host is a professional statistician (as in PhD, as in published in the literature) who specializes in time series analysis.

And that your “teaching” him just sorta makes the rest of us laugh at you.

Sylvain,

Lee did not use the term “benchmark”. You did.

Another conceptual difference between climate and computers is that feedbacks in climate are eventually self stabilizing – they reestablish a balance at a new level rather than entering “endless loops” that “runaway” like software does.

Hope that helps,

arch

Sylvain, you can spare me the jargon-filled technobabble about university computer science, because it’s clear you don’t even understand high school math.

Subtracting a constant value from a series of numbers won’t alter whether the series trends up or down. Again, folks, work out the darn arithmetic and algebra to see for yourselves!

— frank

Sylvain,

No, you didn’t explain how benchmarking is taught.

But first you have to explain how it is relevant to temperature anomalies anyway. You appear to have no inkling, even now, what the graphs mean.

“Place little faith in an average or a graph or a trend when those important figures are missing. Otherwise you are as blind as a man choosing a camp site from a report of mean temperature alone.”

Darrell Huff

[Response: It’s a pity that you can’t let go of your misconception. Perhaps some day you’ll realize how foolish you are.]

Sylvain, Huff’s quote is a good one but it assumes some basic knowledge about what is important.

If you’re hung up about a lack of 0.0 on the Y axis, then talk to Goddard about it since his graph doesn’t show it (but it’s not the source of his deception). Perhaps he can help you.

Think in DC, not AC (not sinusoidal).

Sylvain would have us believe that the mean of 2,3,2,1,2,3,2,1,2 is… 0

Because, see, one has to benchmark against the data, and place the origin of one’s coordinate system at that benchmark, so zero is at 2.

Owen’s numbers are from NCDC; like GISTEMP, the NCDC anomalies are largely derived from GHCN data (“Global Historical Climate Network”, or some close variant thereof.) But the methodologies differ, beginning (IIRC) with the grid scheme chosen, so there will be those differences. One obvious one is baseline; GISTEMP includes the earliest, coldest years as part of its baseline period, so its anomalies are higher–or so I seem to recall at least.

Great analysis as usual, Tamino. I saw a similarly themed post on WUWT by Roger Pielke Sr. that claimed that the “lull in global warming” from 1998 to 2008 “falsifies” the idea that CO2 and other greenhouse gases dominate climate change. Since it seems that Pielke Sr. knows the difference between natural variability and climate change, I’m not sure if he is just obtuse or deliberately trying to mislead (or both).

I have a feeling that the fake skeptics just tune out whenever they hear natural variability/statistical significance arguments, perhaps because they have some sort of reason filter or because it requires college freshman-level statistics. From what I read on that link above, Steve Goddard just interprets any sort of argument like the one Tamino carefully laid out as desperation from those who support the AGW argument. I think that their thinking is simple: the global temperature plot between two cherry-picked dates looks flat, global warming projections show a steady temperature increase (based on model *ensemble mean* projections), and so the IPCC global warming hypothesis has been falsified. I get the impression that the fake skeptics don’t realize that the *individual* climate model realizations exhibit natural variability not too unlike that of the real world.

That’s why I wonder if it would be instructive for some to pull out the global temperature time series from individual runs of the IPCC models, and cherry-pick some 10-year periods between, say, 1990 to 2020 that have a similar “global warming lull” due to natural variability. If the same models that project a substantial increase in global temperatures due to greenhouse gases also show brief periods not unlike the last decade, then you would think that people would be more hesitant to claim that AGW has been falsified, right?

Nice try. Except models are anathema to the fake skeptics. Play again?

Never underestimate the power of ideology to overcome any amount of logic or reasoning.

Nat J

Another, simpler, approach is to construct an artificial temperature series, with the same overall trend and with the same noise characteristics as, say, GISS from 1975. We can make our artificial series as long as we like – say 100 years – and examine it to see IF it contains any periods of say 10 years with declining temperatures. If we find such a “cooling” interval in a temperature series that we KNOW has an overall warming trend then we KNOW that that interval is a product of the noise and cannot represent a change in trend. That then tells us that similar cooling intervals within the real temperature series are to be expected as a result of real noise and are not necessarily evidence of a change of trend.

Of course, this wasn’t my idea: Tamino has done this already, see “Don’t Get Fooled Again”, September 12th 2008, here:

http://web.archive.org/web/20081029173848/https://tamino.wordpress.com/2008/09/12/dont-get-fooled-again/
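For readers who want to try the artificial-series test themselves, here is a rough sketch of the idea, not Tamino's actual code. It builds 100 years of monthly data with a known 0.017 deg.C/yr trend and autocorrelated noise, then counts how many 10-year windows show a negative fitted trend. The trend and the 0.135 deg.C noise size are the figures quoted in the post. The AR(1) noise model and its coefficient of 0.6 are assumptions chosen purely for illustration.

```python
import math
import random

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

TREND = 0.017      # deg C per year, figure quoted in the post
NOISE_SD = 0.135   # deg C, monthly noise figure quoted in the post
PHI = 0.6          # ASSUMED lag-1 autocorrelation, not taken from the post
YEARS = 100        # length of the artificial series
WINDOW = 120       # months in a 10-year window

random.seed(42)

# Scale the innovations so the AR(1) noise has a long-run standard
# deviation of approximately NOISE_SD.
innovation_sd = NOISE_SD * math.sqrt(1 - PHI ** 2)

times, temps = [], []
noise = 0.0
for m in range(YEARS * 12):
    noise = PHI * noise + random.gauss(0, innovation_sd)
    times.append(m / 12)
    temps.append(TREND * (m / 12) + noise)

# Trend over the whole series, and over every overlapping 10-year window.
full_slope = ols_slope(times, temps)
window_slopes = [
    ols_slope(times[i:i + WINDOW], temps[i:i + WINDOW])
    for i in range(len(times) - WINDOW + 1)
]
cooling_windows = sum(1 for s in window_slopes if s < 0)

print("full-series trend:", full_slope)
print("10-year windows:", len(window_slopes))
print("windows with negative trend:", cooling_windows)
```

Any window that comes out negative here is noise by construction, since the underlying trend never changes. How many such windows appear depends on the seed and on the assumed noise model, so it is worth rerunning with different values of `PHI` and different seeds.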

Hello Slioch,

Yes, what you describe and what Tamino did is a good test and is convincing to someone like you (I assume) or me. However, I specifically mentioned the part about analyzing IPCC model simulations because I’m sick and tired of hearing that this so-called lull in global warming is completely beyond the realm of our state-of-the-art climate model simulations, and so climate scientists are now scrambling to explain away this anomalous recent period. That seems to be the crux of Pielke Sr.’s latest comment. I thought that if these same people like Goddard or Pielke saw that these same climate models also show similar global warming “lulls” at times, then maybe they wouldn’t be so quick to think that global warming has been falsified. Then again, I’m probably giving them too much credit, so my idea probably would be a waste of time. Also, the post by Tamino that you linked should be convincing enough, and it doesn’t seem like those concepts have sunk in with most of the fake skeptics.

We don’t even need an artificial series, we can use the actual data beginning in 1941 and observe that the 10 year cooling trend was -0.26 deg C during a period when there was very little change in CO2 (it actually decreased in the latter part of World War 2). When I point out to denialists that this proves that non-CO2 causes can overwhelm the effect of CO2 over a 10 year period, they go deliberately dense.

Here’s an example, complete with animation, which should be obvious even to a computer programmer:

“It Hasn’t Warmed Since 1998”

(http://capitalclimate.blogspot.com/2009/04/it-hasnt-warmed-since-1998.html)

Sylvain | July 19, 2011 at 3:35 am |

Is 2011 warm? The west coast of North America and middle certainly isn’t. Neither is western Europe. Where are you from?

Everywhere east of the Rockies is currently experiencing a heat wave, look at the Weather Channel national map:

http://www.weather.com/newscenter/nationalforecast/index.html

23 states are under a heat advisory!

“Except for the Northwest and Maine, the nation bakes midweek and any thunderstorms will be few and far between.”

Where are you from?

Oh, you meant the trend of the last few days. I thought you meant all of 2011. I’ve been in Seattle mostly, and have visited Vancouver and Calgary this year and have relatives in Sweden. Everyone in those places says it’s been cold so far. In the prairies (maybe the north only) the reports were of delays to crops and gardens due to cold, wet weather.

BTW, check your own city’s weather trend out by going to

http://www.wolframalpha.com

then enter “weather” and your city, then you can select different time periods in the temperature graphs. The “all” category gives the longest.

[Response: In addition to working under a “benchmark” misconception, you also seem to think that less than one year’s data is meaningful in terms of the trend in *climate*. Until you admit to yourself that you have attached yourself to notions that are meaningless or outright counterproductive, it’ll be very hard for you to grasp reality.]

I’m the one who referred to 2011 as warm. Versus expectations, I believe it is so far. January thru June is the 11th warmest year. The year-to-date numbers:

Jan – 17th warmest

Jan thru Feb – 16th warmest

Jan thru Mar – 14th warmest

Jan thru Apr – 14th warmest

Jan thru May – 12th warmest

Jan thru June – 11th warmest

None of that means much, but my hunch is the year will break into the top 10 and work its way up as long as ENSO neutral conditions are present.

Where I live, it’s been hot as heck since May. June 2011 was the hottest June in the record book, and July has been almost solid 100F-plus days. And it no longer rains here.

Sweden is not in western Europe.

Last time I checked it was next to Norway in northern Europe.

No offence, but the data for 2011 so far from the CRU is 0.302, this is less than the last 10 years so far.

http://www.cru.uea.ac.uk/cru/data/temperature/hadcrut3gl.txt

[Response: You’ve done nothing but fixate on noise from time spans too short to be meaningful. It’s quite ridiculous (by which I mean, worthy of ridicule) to use a 5-month average to suggest that the trend is anything other than continuing. Evidently you’re not capable of doing better.

I wonder why you would think anyone here would fall for this. Maybe you couldn’t read the title of this post?]

Oh God, we’ve had a very strong La Niña and all of climate science is proven wrong!

Of course, Sylvain doesn’t realize that without climate science, he wouldn’t know of La Niña,

And unlike Sylvain, people quantify the effect.

Sylvain:

No offense taken, how could anyone be offended by the statistical stupidity that follows this snippet?

Seriously …

“Less than the last ten years so far. . .”

Not meaningfully, no–you can find lower 5-month stretches in that data (for instance, the end of 2007 into the beginning of 2008.) And as Tamino says, a five-month stretch is not particularly meaningful in the first place.

From a physical perspective, we know basically why this stretch was a bit cooler; we had a pretty strong La Nina, which is known to lower global means a bit. But we’re now back at ENSO-neutral conditions, and we may expect higher anomalies once again, with some confidence.

What’s been of interest to many is how the temps have stayed as high as they have, given the low solar activity of the last couple of years. (Of course, some denialist types were seizing on this ‘quiet sun’ to predict a new mini-Ice Age–about which they steadfastly refuse to become embarrassed.)

Ooh, Sylvain goes from spewing ‘systems analysis benchmarking synchronization’-style technobabble nonsense … to spewing simplistic nonsense.

— frank

Sylvain, Thanks for the weather report, but the subject was climate. Learn the difference.

If you look at years that started with a La Nina ending in the spring, and that had ENSO Neutral for the rest of that year, all but one trended up during the year. The models suggest resumption of La Nina is possible, but it looks like that would not happen until late in the year, if at all. Time will tell, but to me ENSO Neutral for the rest of the year appears most likely.

Beginning with La Nina in the spring, shifting to neutral for remainder:

1989

2001

1996

2008

So HadCRUT through 5 months is following the above pattern, and it is likely June will bend that trend up.

I do not think skeptics thought 2011 had a snowball’s chance of ending up a top-10 year. They thought it was going to be a very cold year: as in, 1990s cold: a reversal of warming. It appears unlikely to me it will be as cold as 2008 was, and that is not going to change the longterm trend for warming.

I’m from Earth.

Where are you from, Sylvain?

So, Goddard wants to play the cherry-picking game with 10-year time frames? OK, I am up for it.

Here you go: It is cooling! We are clearly heading for an ice age, with ice sheets all the way to the equator.

Oh no, actually… hang on, we are in fact heading for a hothouse Earth! We will have crocodiles in the Arctic ocean within decades!

But then again, maybe the temperature is actually flat? (and of course Goddard’s own cherry pick)…

Who needs statistical significance when one can just pick whatever suits one’s preconceived conclusions?

Here are all of my cherries in context (GISS, HadCrut).

The middle of North America isn’t warm? Temps over 100 and heat indices over 130 in Iowa. Sylvain, where do you get your information? I hope you didn’t pay for it.

Yes, yesterday it was reported that Oklahoma was in the worst drought ever, edging out ’33, for the moment at least–though the meteorologist interviewed said that another week without rain would pretty much lock-in 2011 as the worst ever. Rainfall–well, dearth of same–is the primary component of the drought, of course, but the sustained triple-digit (F, of course, this is the US) temperatures are a significant contributor to the bone-dry soil.

Meanwhile, the northern reaches of the ‘middle’ are suffering seriously, with Chicago struggling to get seniors to cooling centers and Minneapolis seeing dewpoints in the 80s–no lack of moisture there, apparently, but it’s not very favorable to the evaporative cooling systems that come standard on h. sap.

“Heatwave spreads across central and eastern US”

“Seattle shivers”

see:

http://www.bbc.co.uk/news/world-us-canada-14238358

Just to go from climate science to weather forecasting: after El Nino and La Nina, wasn’t 2011 expected to be cooler? If we want to call 11th warmest on record cooling, then we have lost touch with reality. I sure don’t look at this as a good sign.

Just did a SORCE based annual TSI and the running 2011 shows a mean of 0.1043 Watts/M^2 less than their 2003 [TOA]. Now for the non-plussed: 2011 is so far 0.3706 Watts / M^2 higher than 2007.

11th warmest *so far*. By the time all 12 months are in, it could be in a different spot. And while it could conceivably be a lower spot…does anyone know a particular reason to expect it to be? JCH’s “top 10 as long as ENSO doesn’t go negative” hunch makes sense to me.

Looking for years with La Nina in the spring, neutral in the summer, and resumption of La Nina in the fall, I see only one: 2000. 2000 is ~ 16th warmest year.

Sylvain sez: “For an obvious example, if you had a sinusoidal graph you wouldn’t put the zero baseline anywhere – it actually does matter, you put zero in the middle of the y axis.”

The graph of sea ice is roughly sinusoidal. So the average amount of sea ice should be zero, eh?

You don’t ask a weatherman to learn which way the trend goes.

Nicely played, Hank–but you didn’t by any chance read my response to Ray’s comment on the “Green Fascism” article?

It wasn’t nearly as witty as your quip here, but I’d like to steal a little credit for the Dylan reference, if I reasonably can!

Yes, the Seattle temperatures have been low, last summer, this spring, this summer. I was expecting things to warm up a bit with the La Nina. Coastal waters are warm in the NW during an El Nino, but Central North Pacific is cool and the winds we get blow off of that I believe.

So with La Nina being the negative image of El Nino (by definition given the use of linear principal component analysis to define them) I thought things would warm up. But we have been having 60s and 70s, rarely getting into the 80s. Sunday will be in the low 80s, though.

Been a while…

I think the “skeptics” give seminars in their argument styles. There’s a consistent pattern in their argument styles. Attack, ignore defeat, attack again or change subject…

Jbar,

Add to the tactic, the [eventual] agreeing but, hand-waving the level of impact/change/effect…. “Yes, CO2 is a GHG, but the impact is just small” type. They don’t wanna understand concepts of the column of air reaching up many kilometers, boat dreaming that 0.0394% or 394 ppmv is small enough to let LW escape near unhindered even though it’s 40% over the 19th century level. And when snow disappears from 40-50 million km^2 on the NH spring summer period, it’s largely offset by Spencer’s sheep cloud albedo, of course a negative feedback. Bet you one thing, in those quarters the zombies would just walk by… not animated to scrape the void craniums.

Sylvain, the “How to Lie With Statistics” quote was talking about a graph with NO numbers on the y-axis. That is a very different matter from what we have here. The y-axis of these temperature anomaly graphs means something specific and well-defined.

So nice quote, but well out of context. Could be an entry in “How to Lie with Book Excerpts” if someone was feeling snarky.

I was paraphrasing http://mind.ofdan.ca/?p=1039#comment-71834 but I’m sure I read your comment earlier and was inspired to rethink that.

Reply to Chris O’Neill | July 24, 2011 at 3:27 am |

Yes, indeed, and I fancy information from real temperature series may have more traction with those inclined to doubt such information.

But I don’t think providing information in the period you suggest – after 1941 – is useful IF what you are wishing to do is to show that periods of many years with a cooling trend are to be expected even in times when there is otherwise a long-term warming trend, which is what I was concerned with. I prefer to use the period of warming after 1975, using graphs such as this from WfT:

http://www.woodfortrees.org/plot/gistemp/from:1975/plot/gistemp/from:1979.67/to:1987.58/trend/plot/gistemp/from:1990/to:1996.42/trend/plot/gistemp/from:2002/to:2009/trend

Slioch – I’m just a knucklehead at this stuff, but a few months ago I decided to make a graph that would show how longterm trends are affected by brief lulls in warming, so I made several variations of this graph using all the temperature series on WfT.

Adding the trend from 1920 to 1951 , the ever shorter trends to 1951 go severely negative. It appears to me the longterm trend to 2011 from that period still gets stronger.

It’s fun playing with WFT, isn’t it?

BTW you don’t need to specify a To time unless you want to limit it, i.e., it defaults to the latest month available so you can often skip that box.

I used the WfT “last” function to give the UAH trend for the last 20, 19, 18 etc years (e.g., http://www.woodfortrees.org/data/uah/last:xx/trend, where xx is an integer multiple of 12, from 36 – 3 years – to 240 – 20 years). They are ALL positive, and the average is close to the 20 year trend.

# years   annual trend   start date (June)
20        0.020870       1991
19        0.0208267      1992
18        0.0165397      1993
17        0.0137427      1994
16        0.0128061      1995
15        0.0106864      1996
14        0.00594293     1997   <– includes 1998 El Nino; no surprise here.
13        0.0128117      1998
12        0.0162600      1999
11        0.00967192     2000
10        0.00263393     2001   <– spike noted above; coincidentally about 10 years ago
9         0.00539484     2002
8         0.00774946     2003
7         0.0129574      2004
6         0.0143876      2005
5         0.032438       2006
4         0.0863044      2007
3         0.0517236      2008

average   0.0196526      (how many people know this is meaningless, and why?)

Trend for full (from 1978.92 to 2011.5) UAH dataset is 0.01367

Personally, I’m too lazy to go through month by month in start and end dates, to find how many pairs give an (insignificant) negative trend.

Why did I use UAH? – it’s from skeptic Dr. Roy Spencer.

Only one trouble with the post. The chart upon which it is based fails to show the 1998 temperature spike, which as all recorded sources show, was still the highest observed

[BUZZZZZZZZZZZZZZZZZZZZZZZZZZZZZZ!!! Wrong.]

(except perhaps for 1936).

[BUZZZZZZZZZZZZZZZZZZZZZZZZZZZZZZ!!! Wrong. Not even close. This is GLOBAL temperature, not U.S. temperature (and the U.S. is only about 2% of the globe).]

Hence I assume that WoodforTrees had already smoothed it out of their records before you started your analysis.

[BUZZZZZZZZZZZZZZZZZZZZZZZZZZZZZZ!!! Wrong.]

When working on pre-smoothed data it is not possible to exclude noise, be it white or red, with any degree of certainty. Be that as it may, I do not think that ten or even fifteen years of static or even falling temperatures would suffice to prove that the long term warming trend which started before the industrial revolution has yet ended.

[Response: Clearly you’re spewing at this point — but do you have to be so weak?]

“Agnostic” sounds like a True Believer of the ideological variety…

because “Agnostic” clearly rejects science …