A reader recently asked:

T, from my mechanical engineering world we have strict rules on sampling rates vs. signal frequency rates. I.e., you cannot reliably measure a 60 Hz AC sine wave with a 5 Hz analog sampling device. You end up with strange results that don’t show spikes well and might not show averages well either. Can you help me understand how proxies sampled every 120 years can resolve relatively high-frequency temperature spikes?

This objection comes up so often from those who are accustomed to data which are evenly sampled in the time domain, and the misconception is so firmly imprinted on so many people, that it’s worth illustrating how uneven time sampling overcomes such limitations.

Let’s start with a signal consisting of a 60 Hz sine wave of amplitude 1. We’ll even add noise with standard deviation equal to 1. We’ll measure it at a sampling rate well below the signal frequency — but we won’t use even time sampling. Instead, I’ll let the time differences between samples equal 0.2 plus uniform random noise. Hence the maximum possible sampling rate will be 1/0.2 = 5 Hz, and in fact for my sample the maximum sample rate is 4.8 Hz and the mean sampling rate is a paltry 1.42 Hz. By conventional wisdom among engineers, this is nowhere near enough properly to characterize a signal at 60 Hz.
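
For readers who want to try this, here is a minimal sketch of how such a data set could be generated. It is not the code used for this post: the function names are hypothetical, and the gap rule (0.2 s plus a Uniform(0, 1) draw) is an assumption chosen because it is consistent with the maximum and mean sampling rates quoted above.

```python
import math
import random

def simulate(n=200, freq=60.0, amp=1.0, noise_sd=1.0, seed=1):
    """Return (times, values) for a noisy sine observed at irregular times."""
    rng = random.Random(seed)
    times, values, t = [], [], 0.0
    for _ in range(n):
        t += 0.2 + rng.random()  # assumed gap: 0.2 s + Uniform(0, 1) s
        times.append(t)
        values.append(amp * math.sin(2 * math.pi * freq * t) + rng.gauss(0.0, noise_sd))
    return times, values

def sampling_rates(times):
    """Return (maximum rate, mean rate) in Hz, based on the gaps between samples."""
    gaps = [b - a for a, b in zip(times, times[1:])]
    return 1.0 / min(gaps), 1.0 / (sum(gaps) / len(gaps))

if __name__ == "__main__":
    times, values = simulate()
    max_rate, mean_rate = sampling_rates(times)
    print(f"max rate {max_rate:.2f} Hz, mean rate {mean_rate:.2f} Hz")
```

With this gap rule no two samples can be closer than 0.2 s, so the maximum rate can never exceed 5 Hz, and the mean rate lands far below the 60 Hz signal frequency.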

Here’s the data:

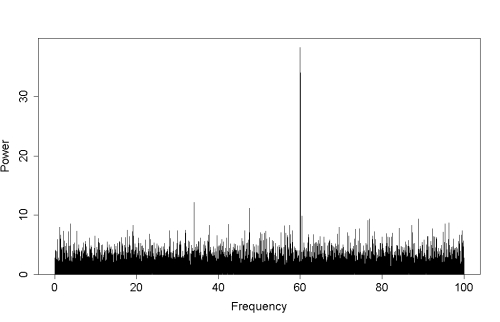

Here’s the discrete Fourier transform of the data:

The signal frequency (at 60 Hz) is plainly evident. Its signal power doesn’t significantly leak to other frequencies. There’s really no ambiguity about it, despite the fact that its frequency is much higher than the maximum sampling rate.

In fact a correct analysis reveals that the signal has frequency 60.00006 +/- 0.00075 Hz, amplitude 1.09 +/- 0.21.
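
The peak search can be sketched in a few lines. This is an illustration under assumptions, not the analysis behind the numbers above: the gap rule (0.2 s plus Uniform(0, 1)), the seed, and the function names are all hypothetical. It evaluates the plain DFT at the actual sample times over a fine grid of trial frequencies and reports the strongest one.

```python
import math
import random

def simulate(n=200, freq=60.0, amp=1.0, noise_sd=1.0, seed=1):
    """Noisy sine observed at irregular times (the gap rule is an assumption)."""
    rng = random.Random(seed)
    times, values, t = [], [], 0.0
    for _ in range(n):
        t += 0.2 + rng.random()  # assumed gap: 0.2 s + Uniform(0, 1) s
        times.append(t)
        values.append(amp * math.sin(2 * math.pi * freq * t) + rng.gauss(0.0, noise_sd))
    return times, values

def dft_power(times, values, freq):
    """Power of the plain DFT at one trial frequency, using the actual sample times."""
    w = 2 * math.pi * freq
    c = sum(v * math.cos(w * t) for t, v in zip(times, values))
    s = sum(v * math.sin(w * t) for t, v in zip(times, values))
    return (c * c + s * s) / len(values)

def peak_frequency(times, values, f_lo, f_hi, step):
    """Trial frequency with the highest DFT power on a regular frequency grid."""
    mean = sum(values) / len(values)
    centered = [v - mean for v in values]  # remove the mean so 0 Hz does not dominate
    n_steps = int(round((f_hi - f_lo) / step))
    freqs = [f_lo + k * step for k in range(n_steps + 1)]
    return max(freqs, key=lambda f: dft_power(times, centered, f))

if __name__ == "__main__":
    times, values = simulate()
    best = peak_frequency(times, values, 40.0, 80.0, 0.005)
    print(f"strongest peak near {best:.3f} Hz")
```

The frequency step has to be small compared with one over the total time span of the data, otherwise the grid can step right over a narrow peak.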

This is not due to the use of a uniform distribution for the sampling time intervals. It’s a much more general property of uneven time sampling — just about any time sampling other than regular will reveal the signal as well.

Astronomy is plagued by sampling problems. We just don’t get to observe when we would like to. Most targets can’t be seen during the day, or when the sun or moon is too close, or when the weather doesn’t cooperate. And for professionals rather than amateurs, competition for telescope time at major observatories is fierce (which is a distinct advantage for amateur astronomers).

That’s why astronomers are used to irregular time sampling. It has some disadvantages, but it also has advantages such as the ability to search frequency space far far beyond the “Nyquist frequency” or, frankly, any frequency limit you care to define. In my opinion, in all but very specific circumstances the advantages of uneven time sampling outweigh the disadvantages, by a lot. Uneven time sampling is sometimes thought to be a bane for data analysis, but it’s far more likely to be a boon.

OTOH, isn’t it true that if you had a very narrow spike, and none of it happened to get sampled by the particular set of uneven widely spaced sampling points, that the samples wouldn’t be able to reconstruct that spike?

Tom … OTOH, isn’t that a bit like a child asserting that there may be a bogey man hiding under her bed? It’s just that every time she looks the bogey man isn’t there.

And what would be the physics behind the production of such an extremely brief spike? Perhaps, conceivably, a meteorite hitting a large deposit of sub-sea methane clathrates might produce one.

So, OK, let me concede, for the sake of consideration, that it may be just conceivable that such an event happened that wasn’t picked up by the temperature proxies, nor, somehow, by any other signature in the geologically very young sediments from that time.

But what would that tell us about our present predicament? Would we feel reassured if indeed evidence were found that a sudden spike in global average temperatures had occurred, or could have occurred, in the otherwise gentle Holocene?

I think the answer to that question is similar to what the child would have concluded, if on looking she had actually found the stuff of her nightmares lurking beneath her bed.

Unfortunately methods for evenly spaced data are much more readily available, and things can get really messy if people try interpolating unevenly sampled data and then re-sampling with regular spacing.

Wow.

Thank you.

How about extending your signal analysis to a wider range? What it will show at 120Hz? or 180Hz? or 60kHz?

Tamino- I remember your fascinating posts on aliasing and astronomical observations- those would be some nice links for this post.

Perhaps you would care to enlighten us as to how, precisely, the transform in the second figure was computed? Or, better, provide a reference to these techniques that are apparently so well known in astronomy, so that we may enlighten ourselves independently?

It would certainly be interesting to understand “how uneven time sampling overcomes such limitations” but without further exposition it might as well be magic.

Read these:

https://tamino.wordpress.com/?s=aliasing

The R code for the date-compensated discrete Fourier transform (DCDFT) that is used here can be found in the R scripts that come with the book “Analyzing Light Curves: A Practical Guide” and can be found here:

http://www.aavso.org/software-directory

The paper developing the method is here:

http://articles.adsabs.harvard.edu/full/1981AJ…..86..619F

With the R code provided in the link above it is quite easy to replicate the example (note that the DCDFT takes quite a long time to compute).

[Response: Quite. But this was calculated by the simple DFT (discrete Fourier transform). Both will do the job.]

I thought that simple DFT required evenly spaced sampling – that is what Wikipedia says. And since the example has uneven sampling, simple DFT cannot be used here.

What am I missing?

[Response: One can still define the DFT as proportional to
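
A common way to write a DFT for arbitrary sample times (an assumption here, not necessarily the exact expression the response used, and with symbols chosen for illustration) is:

$$F(\nu) \propto \sum_{j=1}^{N} x_j \, e^{-2\pi i \nu t_j}$$

Here $x_j$ is the value observed at time $t_j$ and $\nu$ is the trial frequency. Nothing in the sum requires the $t_j$ to be evenly spaced; even spacing is what the FFT algorithm needs, not what the definition needs.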

That’s what I had been led to believe.

but it’s far more likely to be a boon.

Wonderful lesson.

@rufus A direct Fourier transform is used. This is a very classical tool. However, people normally use the FFT, which is much faster. The FFT works with evenly spaced samples whose count factors into small numbers; the preferred form is 2^n. I note that astronomers often use the Lomb periodogram because it makes it easy to calculate the probability that a signal is produced by a random fluctuation of noise.

One additional factor is that a proxy record with 120 yr resolution is likely to be effectively smoothed/averaged in some way over that interval. This process also greatly reduces aliasing, particularly if the smoothing kernel and/or sampling rate are variable.

Tamino – thank you for an excellent and eye-opening post. As an engineer I can remember how the Nyquist Criterion was presented to us in class as being on a par with the laws of thermodynamics. And how we must never, never, never sample a signal at less than twice the maximum frequency. We never covered uneven time sampling, or if we did I must have slept through that class.

Astronomers rock! — even “folk-singer” astronomers, apparently.

Guess that’s why they get immortalized in cartoons.

Some of us are jealous.

PhillipS, your experience is an aberration…it is standard, in courses where this comes up, to say something like “on an equispaced grid you need at least 2 points per wavelength to resolve a sinusoid” or “on an equispaced grid the highest wavenumber that can be resolved is pi/h where h is the grid spacing.”

I was taught the same as PhilipS. This post has been very enlightening, and quite surprising. I am glad I don’t have to do stats for a living as it always feels like a subject far too easy to screw up in.

Randomization is freakishly powerful. I wonder what can be said about non-random non-uniform sampling (if there’s anything interesting to say).

[Response: I don’t know much about that, but — I have seen a suggestion that choosing observation times based on using the Fibonacci ratio will give better results than randomizing. I’m skeptical (which doesn’t mean I believe it to be wrong).

The thing about most astronomical time series is that we don’t get to choose the observation times, nature and observatory scheduling choose for us.]

Astronomer Rick White talks about what he refers to as “Golden sampling” (which involves the Fibonacci sequence) here

It would be interesting to see your take on this, Tamino.

Thanks for the link; I will note it for reading when I’m back from my travels.

To explain my thinking: In computer science terms, there’s the concept of an adversary who gets to know our sampling schedule and can design the most devious function possible that will fool us; you allude to this above. Randomization can often completely disarm an adversary.

However, nature is not a conscious adversary (nor does nature try to help you), so often you discover better-than-expected performance. We typically invoke “nature” or somesuch nonsense to claim there’s a reason a technique works better in practice than what we can prove it should achieve. This is our version of archaeology’s “ceremonial purpose” cop-out, so for important questions we like to better analyze what is actually going on. Which is what your post is doing.

My question is my computer scientist reflexes wondering what’s the key bit: randomization or non-uniformity.

I look forward to reading the links to learn more; thanks!

I was taught the Sampling Theorem, its proof, and the Nyquist criterion for evenly sampled time series 20+ years ago and I teach the very same to engineers and physical oceanographers still. A single, well-constructed example (as Tamino does above) does NOT constitute proof that we can ignore the Sampling Theorem. To the best of my knowledge there is no proof that uneven sampling guarantees signal recovery as demonstrated in the above example. It may work sometimes and it may fail at others. Its success or failure will become apparent only if one knows signal and noise ahead of time.

I suspect (meaning I do not know for sure) that success depends critically on (a) the sampling being random and (b) the time series being long enough. I suspect that for uneven sampling to work, the Nyquist criterion must be roughly satisfied for a sub-segment of the data to provide stability for the other segments where the Nyquist criterion is roughly violated. For randomly sampled data of an infinitely long time series, this is always guaranteed. I am not so sure this is true for a finite record length.

The problem with uneven sampling is, I think, that (a) we do not know how “random” the sampling is, (b) we do not know how long we have to sample to recover signals from unevenly sampled records, (c) we have no theory to tell us the uncertainty of our parameter estimates for the general case when neither signal nor noise are known a priori.

[Response: I quite understand your skepticism, it’s very natural. But you’re mistaken.

I’m fortunate enough to have played a meaningful role in the development of the theory of Fourier analysis of unevenly sampled time series over the last several decades (an effort which, as far as I know, has been spearheaded by the astronomical community). This result is absolutely robust in the fullest sense of the word.

Variable stars often show very short periods but are sampled only at long time intervals, yet time and time again we are able to discern their periodic fluctuations, and model their variations with great precision, due to the uneven time sampling. This has not required advance knowledge of the signal or the noise. Right now I’m working on a project on RR Lyr variables, and just this morning I was studying in detail a star (observed by OGLE, the Optical Gravitational Lensing Experiment) with period 0.325 days but for which the mean time between observations is 10.5 days and none of the observations are closer in time than a whole day. We have data for thousands of such variables, all of them have periods much less than a day and all exhibit similar time sampling, but we are able to quantify their oscillations in every case.

The fact is that uneven sampling making signal identification possible under these circumstances is the rule rather than the exception.

And there most certainly is theory to tell us the uncertainty of our parameter estimates for the general case when neither signal nor noise are known a priori. I’ve played a small part in its development.]

Ok, I take this as a fun challenge to try it out on some of my data, both the evenly spaced (ocean temperature, current, and pressures) and the randomly sampled (optical satellite imagery) kinds. I got your 1995 and 1996 publications as well as the 2003 Fortran codes. Let the new fun learning continue … wherever it may lead, perhaps I will have to revise my course as well. Thank you for posting and distributing these ideas beyond astronomy here ;-)

I think your initial point about sampling is somewhat inaccurate and does not accurately describe the Nyquist criterion, which is that you need to sample at 2x the bandwidth of the signal. This same criterion is accurate for both uniform sampling and non-uniform sampling. Non-uniform sampling will also work but will require a different set of interpolation functions for recreating the data.

For the case of a sine wave the bandwidth of the signal is actually 0 so any rate with the exception of a multiple of the frequency will work with the exception that you will potentially see an alias of the signal. This is actually a common practice in digital communications which utilize IF sampling which effectively down converts data by sub-sampling it.

[Response: I think you completely misunderstand both the Nyquist criterion and this post.]

I am a practicing signal processing and communication systems engineer with multiple patents related to sampling. I have also taken numerous courses on this topic including a complete graduate level course focused on advanced sampling theory with heavy emphasis on non-uniform sampling. I completely understand the sampling theory as well as this post. Using non-uniform sampling will solve this problem as would sampling at 7MHz or any other number than a direct multiple of the carrier frequency. The technique is shown below :

http://en.wikipedia.org/wiki/Undersampling

The issue with non-uniform sampling is that instead of being able to use sinc interpolation to recreate the original signal you need to create a family of interpolation functions based on the position of the sampling, which is somewhat processing intensive as well as complicated. I prefer using a different frequency.

[Response: I’m among those who helped develop the theoretical understanding of Fourier analysis of unevenly sampled time series. So perhaps you’ll understand that your credentials don’t impress me.

Your additional comments reinforce my opinion that you have utterly misunderstood. No, I don’t particularly care to argue about it with you.]

What is (if any) the relationship between irregular sampling as discussed here and compressive sensing, where very undersampled data is used to reconstruct an information limited (sparse) signal?

Nyquist sampling allows reconstructing every bit of the signal under the limit, while if the signal consists of a limited set of frequencies (thus the sparsity) the under-sampled data can be used in the compressive sensing framework to constrain the reconstruction and reconstruct far above Nyquist.

Or is it more appropriate to consider this irregular sampling of a repeated signal simply a stretched version of a high density sampling of the short period signal? With each cycle of the repeated signal sampled in only a few locations, but the irregular repeats ending up as a much higher density dataset sampling each portion of the signal?

This is a pretty old topic. I took the course 20 years ago from a book that was older than that. The Wikipedia article says it was first analyzed in 1967.

http://en.wikipedia.org/wiki/Nonuniform_sampling

The key point though is that your average sampling rate must be greater than the Nyquist rate to properly recreate the signal. This applies to non-uniform sampling as well. In fact, non-uniform sampling does have somewhat lower performance relative to noise and other effects. You don’t even have to directly sample the signal. Derivatives and other observations can also be used, with worse noise performance.

[Response: I guess I’ll do another post on the topic. Stay tuned.]

Tamino, this is a great post. I like Part II as well, but I need to mull over that one a bit longer.

The Earth’s climate system certainly has periodic elements, but it is not designed via information theory to generate signals for reliable transmission of information across a narrow noisy channel. All this invocation of Nyquist seems at best a serious lack of understanding. I’d suggest that 1) has little relevance to 2) and especially 3):

1) design for reliable Information transmission systems

2) Analysis of variable stars, where periodicities exist, but observations are constrained as Tamino says

3) Marcott et al. analysis of 73 geographically-distributed records, whose sampling dates are fuzzy, but many of which are strongly constrained by physics to be reasonably correlated over century-scale. They also are constrained by higher-resolution proxies (like ice-cores) that simply disallow some possibilities. In all this, nobody has suggested physics that allow a modern-style temperature rise to hide from all the records. They weren’t trying to extract high-frequency periodic signals.

Hopefully, Tamino’s forthcoming post will get more people to understand.

====

I am a once-upon-a-time Bell Labs engineer who first read of Nyquist in high school, in Ch. 2 of (Bell Labs Director) J. R. Pierce’s “Symbols, Signals and Noise” (1962), and who worked for a while in the same building as had Nyquist (and Shannon) decades before, and where Tukey still worked. While this was not among my specialities, most technical people had to know the basics, and it is irksome to see such a misapplication of information theory.

Rather than red herrings, the interesting science/statistics question remains the usual one of further bounding uncertainty of the temperature path, both globally and when possible regionally. I.e., the evidence is very strong that nothing like the post-I.R. global rise has escaped notice, but the question is the size and nature of jiggles that were possible, including cases where global temperature didn’t change much, but ocean state-changes caused interesting regional variations.

I must be missing something regarding this as this post was titled “Sampling Rate” and directly discusses sampling a waveform. Nyquist rate is clearly applicable to sampling theory and is directly mentioned in this post whereas the topics you mentioned are not. I frankly know nothing about those topics and won’t comment on them.

Information theory is a much broader topic than I think you may realize. It defines a theoretical framework related to the information content of a signal. I would agree that the general sampling theory is not directly applicable to signals with known characteristics, but information theory can actually be used to define the “information” for these signals.

Unfortunately I never worked in the same building as the ghost of Shannon so can’t claim to be an expert, although I did get a graduate degree in this area so I guess that might count for something.

Tamino, I kind of replicated this analysis on a spreadsheet and was able to get this same 60 Hz peak. I’m not sure whether I did the math correctly for the DFT (I used only the real portion of e^(ix), i.e. cos x). I did find that when I shift the phase of the underlying 60 Hz signal before doing the DFT, I get anywhere from a very strong 60 Hz peak to nothing at all. Am I just doing something wrong there, or does the phase matter when applying the DFT?

[Response: The phase matters.]
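
A small sketch may help show why a cosine-only calculation swings with phase. It is an illustration under assumptions (noise-free 60 Hz sine, gaps of 0.2 s plus Uniform(0, 1), hypothetical function names), not the spreadsheet or the original analysis. It compares the amplitude recovered from the cosine sum alone with the amplitude from the cosine and sine sums combined.

```python
import math
import random

def uneven_times(n=200, seed=1):
    """Irregular sample times; the gap rule is an assumption."""
    rng = random.Random(seed)
    times, t = [], 0.0
    for _ in range(n):
        t += 0.2 + rng.random()  # assumed gap: 0.2 s + Uniform(0, 1) s
        times.append(t)
    return times

def projections(times, freq, phase):
    """Return (cosine-only amplitude, combined amplitude) for a noise-free sine."""
    w = 2 * math.pi * freq
    values = [math.sin(w * t + phase) for t in times]
    c = sum(v * math.cos(w * t) for t, v in zip(times, values))
    s = sum(v * math.sin(w * t) for t, v in zip(times, values))
    scale = 2.0 / len(times)  # turns the sums into an amplitude estimate
    return scale * abs(c), scale * math.hypot(c, s)

if __name__ == "__main__":
    times = uneven_times()
    for phase in (0.0, math.pi / 4, math.pi / 2):
        cos_only, combined = projections(times, 60.0, phase)
        print(f"phase {phase:.2f}: cosine only {cos_only:.2f}, combined {combined:.2f}")
```

When the signal is a pure sine (phase 0) the cosine sum nearly vanishes, and when it is a pure cosine (phase pi/2) the cosine sum carries almost everything. Combining both sums gives roughly the same amplitude at any phase.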

Tamino, I know I’m not saying this the right way, but it seems that the ‘bandwidth’ of the shift in your sample time is what gives you the ability to detect the bandwidth in the signal. For example, if you do the same analysis as above, but you only allow the sample time to shift in 40Hz increments then you actually can’t recover the clean 60Hz signal. Correct?

[Response: No, you’d still find the signal cleanly. The amazing power of irregular sampling to reveal signals is a bit hard to appreciate until you’ve been doing it for 20 years.]

Wow, that’s surprising. That seems to suggest that the Nyquist theorem doesn’t apply at all. Because what that says is that I could sample a 60Hz signal at a regular 40Hz sample rate and then so long as we randomly discard data points we would then be able to recover the 60 Hz signal.

[Response: Perhaps I misinterpreted what you suggested earlier. If you sample at 40Hz then randomly discard data points, all your time spacings are still integer multiples of 25 msec so they’re all in rational-number ratios to each other. So the time sampling is far from random.]
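
The difference between the two kinds of sampling can be checked numerically. The sketch below is an illustration under assumptions (noise-free 60 Hz sine with an arbitrary phase, a 5% keep probability, gaps of 0.2 s plus Uniform(0, 1) for the irregular case, hypothetical function names). It compares DFT power at 20, 60 and 100 Hz for times confined to a 25 msec grid and at 20 and 60 Hz for genuinely irregular times.

```python
import math
import random

def dft_power(times, values, freq):
    """Power of the plain DFT at one trial frequency, using the actual sample times."""
    w = 2 * math.pi * freq
    c = sum(v * math.cos(w * t) for t, v in zip(times, values))
    s = sum(v * math.sin(w * t) for t, v in zip(times, values))
    return (c * c + s * s) / len(values)

def sine(times, freq=60.0, phase=1.0):
    """Noise-free sine evaluated at the given times (the phase is arbitrary)."""
    return [math.sin(2 * math.pi * freq * t + phase) for t in times]

def gridded_times(n_grid=4000, keep_prob=0.05, step=0.025, seed=1):
    """Times on a regular 40 Hz grid with most points randomly discarded."""
    rng = random.Random(seed)
    return [k * step for k in range(n_grid) if rng.random() < keep_prob]

def jittered_times(n=200, seed=1):
    """Genuinely irregular times; the gap rule is an assumption."""
    rng = random.Random(seed)
    times, t = [], 0.0
    for _ in range(n):
        t += 0.2 + rng.random()  # assumed gap: 0.2 s + Uniform(0, 1) s
        times.append(t)
    return times

if __name__ == "__main__":
    for name, times in (("gridded", gridded_times()), ("jittered", jittered_times())):
        values = sine(times)
        powers = [dft_power(times, values, f) for f in (20.0, 60.0, 100.0)]
        print(name, [round(p, 2) for p in powers])
```

On the grid, the DFT kernel takes identical values at 20, 60 and 100 Hz for every sample, so those frequencies cannot be told apart no matter which points are discarded. With continuous random gaps, the 60 Hz power stands well above the 20 Hz power.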

> irregular sampling to reveal signals

It’s how our senses work, isn’t it?

This post has gotten way too serious, so…

“Irregular Random Sampling”

— by Horatio Algeranon

Irregular, random sampling

Works when we are dating

Uncovers the embedded signal

Especially when we’re mating.

Sorry Hank, that wasn’t really intended as a response to your comment.

Your observation is actually a very good one, IMHO.

Your reference to humans just set off a bell (and Horatio’s mind works in strange ways, in case you had not noticed).

Got it. Thanks Tamino.

“Denyquest Trampling Theorem”

— by Horatio Algeranon

The frequency of science

Is greater than Denyquest

Which means that sci-reliance

Ain’t easily by lie-dissed