We’ve already examined the big picture of global temperature according to the recent reconstruction for the holocene (in particular the last 11,300 years) from Marcott et al. We also took a close look at the recent uptick in temperature. Now let’s see about some of the regional differences indicated by these data.

In their supplemental materials, Marcott et al. included the reconstructions restricted to three very wide latitude bands: the northern extratropics (from 30N latitude to the N.Pole), the southern extratropics (from 30S latitude to the S.Pole), and the equatorial band (from 30S to 30N latitude). These reconstructions are computed using their “Standard 5×5” method. But we noted that they may suffer from a difficulty: as time marches forward, the drop-out of more and more proxies can lead to spurious jumps in the average temperature. This is especially problematic for the most recent time period, because that’s when the fewest proxies remain (so things are less accurate anyway) and proxy drop-out is greatest.

To help with this problem we applied a differencing method rather than a straightforward average. Let’s apply that method to the latitude bands and compare the results with the Marcott et al. standard 5×5 result. We’ll also apply it to a limited sub-region of the northern extratropics which I’ll call the “near north Atlantic” region, covering latitudes 30N and above, longitudes from 90W to 30E.

The Marcott result for the northern extratropics shows two questionable features recently, a pronounced drop followed by a pronounced rise:

The difference method shows neither:

Comparing the two reveals that the recent drop-rise is their main difference (other than the fact that the Marcott result is strongly smoothed due to their Monte Carlo procedure):

The drop defies belief, being more than a full deg.C, and the rise at nearly 2 deg.C is even less plausible:

I conclude that the drop-rise is an artifact of proxy drop-out, but the agreement between the methods for most of the time span argues for correctness during earlier times.

The interesting and useful result is that over the course of the holocene, the northern extratropics shows so much more temperature change than the globe as a whole. Whereas the global temperature covers a range of about 0.7 deg.C, the northern region changes by more than twice that, a bit over 1.5 deg.C. This suggests that perhaps the bulk of global temperature change over the last 11,300 years has been in the northern hemisphere extratropics.

Marcott et al.’s result for the equatorial band actually shows a slight warming over the early holocene, with another large uptick at the very end:

The differencing method shows much less uptick:

Direct comparison illustrates their good agreement except for the uptick at the end:

The southern hemisphere extratropics according to Marcott et al. shows cooling over the early holocene, with yet another large uptick at the end:

The differencing method shows about the same thing:

Comparison reveals their general agreement, and that the ending uptick by the differencing method is only slightly less than that of the Marcott “standard 5×5” method:

The recent sizable uptick in the southern extratropics is not an artifact of proxy drop-out. However, this region has fewer proxies than the others, and by the time one gets anywhere near the 20th century fewer than 10 proxies remain. This doesn’t entirely invalidate the recent result, but does call it into question. Furthermore, the most recent southern uptick is larger than indicated by instrumental data. For these reasons I regard the recent southern uptick as suggestive, but far from reliable.

We can take the differencing-method results for the three latitude bands and combine them into an area-weighted average for the entire globe:

The ending uptick is larger than it was in the difference-method estimate simply using all the proxies, as was done in the last post. This is because the uptick is strongest in the southern extratropics, which have fewer proxies and are therefore underrepresented in a simple averaging method. Area-weighting compensates for this and gives the southern uptick the weight that its geographic area calls for. But again, the recent uptick is based on far fewer proxies, especially in the south, so I wouldn’t put too much stock in it.
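For readers who want to see the arithmetic, here is a minimal Python sketch of area-weighting three latitude bands. It is not the code behind the graphs in this post. The function names and the anomaly values are made up for illustration; the only ingredient is the geometric fact that the fraction of a sphere’s surface between two latitudes is half the difference of their sines.

```python
import math

def band_area_fraction(lat_south_deg, lat_north_deg):
    """Fraction of a sphere's surface lying between two latitudes."""
    s = math.sin(math.radians(lat_south_deg))
    n = math.sin(math.radians(lat_north_deg))
    return (n - s) / 2.0

# The three latitude bands discussed in the post.
bands = {
    "southern extratropics": (-90.0, -30.0),
    "equatorial": (-30.0, 30.0),
    "northern extratropics": (30.0, 90.0),
}
weights = {name: band_area_fraction(*limits) for name, limits in bands.items()}

def area_weighted_mean(band_anomalies):
    """Combine one temperature anomaly per band into a single global value."""
    total = sum(weights[name] for name in band_anomalies)
    return sum(weights[name] * t for name, t in band_anomalies.items()) / total

# Hypothetical anomalies (deg.C) for one time step, for illustration only.
example = {
    "southern extratropics": 0.6,
    "equatorial": 0.1,
    "northern extratropics": 0.2,
}

print(weights)                      # roughly 0.25, 0.5, 0.25
print(area_weighted_mean(example))  # roughly 0.25
```

The equatorial band gets half the weight and each extratropical band a quarter, no matter how many proxies each band happens to contain. That is why a band with few proxies, like the southern extratropics, counts for more here than in a plain average over all proxies.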

For me, the truly fascinating aspect of this is the strong differences between geographic regions. We can see this most clearly by showing the latitude bands on one graph:

Even on this reduced scale the most important conclusions are apparent. Most of the temperature change throughout the holocene has indeed been in the northern extratropics, with slow and steady cooling the pattern since about 5000 BC. The southern extratropics has cooled early, and by much less than the northern extratropics. The equatorial band showed a slight warming up to about 3000 BC.

As Marcott et al. point out, this pattern of polar cooling and slight equatorial warming is consistent with the change in earth’s obliquity (the tilt of its axis) over time. Obliquity has reduced slightly since about 9000 years ago, which tends to bring less sunlight to polar regions but slightly more to the equator. Its effect has been amplified in the northern rather than southern extratropics, perhaps due to that region’s greater sensitivity to ice and snow albedo because of the much greater amount of snow in the north due to the much greater amount of land in that hemisphere. Another factor amplifying the northern cooling over the southern may be changes in the Atlantic meridional overturning circulation (AMOC).

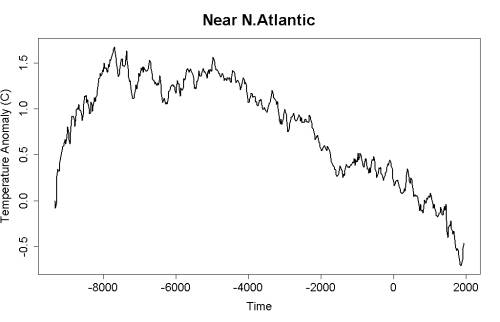

Marcott et al. also point out the even more exaggerated holocene cooling in the northern hemisphere near the Atlantic ocean. I isolated northern extratropical proxies which are not necessarily in or on the coast of, but at least near the Atlantic. Indeed it shows even more pronounced holocene cooling:

This region has cooled over 2 deg.C since its peak in the early holocene, and underscores that regional temperature exhibits much more volatility than global.

As fascinating as these results are, the Marcott et al. reconstruction is hardly the last word on the issue of holocene temperature. But as I said before, it’s a good step forward. I think its important message is correct, that the natural changes earth has undergone over the entirety of the holocene are not significantly larger, but are very significantly slower, than the changes wrought by humankind.

“This region has cooled over 2 deg.C since its peak in the early holocene, and underscores that regional temperature exhibits much more volatility than global.”

I think you might want to say more about this, as it might be misleading: the idea of volatility has to be tied to time-scale. By eyeball (not reliable, of course), the extratropics seem to show similar volatility on century or multi-century scales, whereas the NH extratropics has more at millennial scale (likely due, at least in part, to the ice/snow albedo effect you note).

The 3 bands illustrate why careless readers of temperature spaghetti charts get all excited by differences that are caused, in part, by the fact that some reconstructions (like MBH99) were NH, and others were NH extratropics. (This was noted in PDF @ Strange Scholarship, p.131, 142, 158. It even got a Theme “F”, i.e., the confusion about reconstructions from geographical differences.) It makes perfect sense that NH extratropics (which covers 50% of the NH) differs from the NH, and the 3-band graph shows why.

Finally, it might be instructive to expand the 100AD-current part of those and put the CO2/CH4 records on the same scale and think about regions where snow-albedo effects are seen most strongly.

Excellent series of posts that substantively advance the discussion. I hope you’ll consider bundling these as a letter to Science.

Just think of where the ice caps are during an ice age, and where the melt is.

Actually the ‘differencing method’ is what Church and White use for tide-gauge data. It has some nice properties.

Can I get a bit of an explanation on what the differencing method is?

Differencing method: instead of averaging the data in each time period, you average the *differences* of each series datapoint compared to the previous datapoint in that time series. Then you apply the averaged difference to the previous period’s temperature. (The oldest datapoint has no previous, and must be used “raw”.)

This suppresses spurious results that can crop up due to dropouts of data series, because it doesn’t matter whether the dropped proxy is relatively cool or warm compared to the average, it’s only the changes you’re looking at.
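The description above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the code used for the post: the function names are made up, the data are invented, missing values are marked with NaN, and each step’s change is averaged only over proxies that have a value at both that step and the one before it.

```python
import numpy as np

def simple_average(data):
    """Mean of whatever proxies are present at each time step (NaN = missing)."""
    return np.nanmean(data, axis=0)

def difference_method(data):
    """Average the step-to-step changes, then cumulate them.

    data: 2-D array, rows = proxies, columns = time steps, NaN = missing.
    """
    steps = np.diff(data, axis=1)          # change of each proxy since the previous step
    mean_step = np.nanmean(steps, axis=0)  # averaged over proxies present at both steps
    start = np.nanmean(data[:, 0])         # the oldest step has no predecessor: use it raw
    return start + np.concatenate(([0.0], np.cumsum(mean_step)))

# Invented data: three proxies whose temperature never changes but whose
# baselines differ; the warmest one drops out for the last two steps.
data = np.array([
    [1.0, 1.0, 1.0, 1.0, 1.0],
    [2.0, 2.0, 2.0, 2.0, 2.0],
    [6.0, 6.0, 6.0, np.nan, np.nan],
])

print(simple_average(data))     # falls from 3.0 to 1.5 when the warm proxy vanishes
print(difference_method(data))  # stays at 3.0 throughout
```

Nothing actually cooled in this example, yet the plain average shows a 1.5 deg.C drop purely because a warm proxy left the sample. The differenced version sees only zero changes and stays flat.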

Just looking at the examination for the Holocene as represented by these curves, you wonder where the climate would be naturally headed if it weren’t for the anthropogenic component. If the natural variation is considerably more lumbering than our recent instrumentally demonstrated up-tick, what are the ramifications of where we could have been? I guess that’s completely irrelevant, but perhaps a relief as well.

James Ross Island ice core shows warming for the past 600 years:

http://www.sciencepoles.org/news/news_detail/ice_core_provides_comprehensive_history_of_antarctic_peninsula_since_last_i/

so I suspect the uptick for the far south is, at least in part, real.

Salamano:

Keep an eye out for Bill Ruddiman’s next book, “Earth Transformed”, in the Fall. He has a lot of comparisons of CH4 and CO2 curves from past interglacials, with much discussion of proper alignment to get decent comparisons. See Ruddiman, Kutzbach, Vavrus (2011), Fig 2B (the CO2 tracks of the various interglacials) and

Fig 6, Holocene vs average of 6 previous ones, with +/-1 SD, obviously a tiny sample, but what we have. The SD is ~15ppm, and the pre-IR average is a bit less than 250ppm (as opposed to the 280ppm we got), which is about 1.5 SD higher. Our CO2 divergence starts ~6000-7000y BP.

So, the good news: from millennia ago, human actions slowed down the normal Milankovitch cooling, acting a bit like a thermostat.

The bad news: the thermostat is now jammed on MAX and we are soon to depart the narrow temperature range that covers the history of human civilization, more-or-less permanently on a human-history scale.

You difference every proxy / tide gauge in time, i.e., take the difference between successive epochs; then, for every epoch separately, you sum up all these proxy values for that epoch; then, you cumulate (integrate) these sum values over time, back to an undifferenced time series.

While I presume temperature proxies are well understood, I wonder if any are affected by the total amount of sun they get. That is, I wonder if some proxies also incorporate changes to the earth’s obliquity directly.

Hm, how would that work? There are proxies — 14C and 10Be — that respond to solar activity, and are routinely assumed to be proxies for total solar irradiance/luminosity (but that may be too simple). But D and 18O, e.g., are not like that. Alkenone, I don’t know; one would think somebody laboratory tested this ;-)

It seems to be an active research question. Leduc et al. 2010 might be worth a read. It covers substantially the same proxies used by Marcott, with a particular focus on regional differences between alkenone and Mg/Ca proxies.

My reading of the paper is that the sensitivity of proxies, alkenone ones in particular, is dependent on limiting factors at any particular site. As insolation patterns change so can the relative influence of light as a limiting factor, which means proxy sensitivity can change over time. As I understand it, all the alkenone proxies used by Marcott have been calibrated for temperature under the assumption of constant proxy sensitivity when there may actually be a trend over the Holocene related to insolation.

GP,

So it is similar to the First Differences method NOAA used to use. What are the downsides of using first differences for an approach like this?

I don’t know that there is any downside. I’ve heard it claimed that cumulating small differences like this would lead to an unfavourable error propagation, but not sure I buy that.

Is it not equivalent to padding the NA regions with values equal to the last valid value? That seems OK for a while, but not for a very long stretch.

[Response: No, it’s not equivalent.]
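One way to see why the two are not equivalent is a small numerical comparison. The Python sketch below is a toy with invented data and a made-up helper name, and it assumes the differencing step averages only over proxies present at both time steps. Padding freezes the dropped proxy at its last value, so the padded average dilutes whatever change the surviving proxies show.

```python
import numpy as np

# Invented data: two proxies that both cool by 1 deg.C per step;
# the second one drops out after the third step.
data = np.array([
    [5.0, 4.0, 3.0, 2.0, 1.0],
    [9.0, 8.0, 7.0, np.nan, np.nan],
])

def pad_with_last_value(data):
    """Fill each missing value with that proxy's most recent valid value."""
    padded = data.copy()
    for row in padded:                 # each row is a view into `padded`
        for t in range(1, row.size):
            if np.isnan(row[t]):
                row[t] = row[t - 1]
    return padded

# Approach 1: pad the gaps, then take a plain average.
padded_mean = pad_with_last_value(data).mean(axis=0)

# Approach 2: average the step-to-step changes, then cumulate them.
steps = np.nanmean(np.diff(data, axis=1), axis=0)
difference_mean = np.nanmean(data[:, 0]) + np.concatenate(([0.0], np.cumsum(steps)))

print(padded_mean)      # 7, 6, 5, 4.5, 4: cooling rate halves after the drop-out
print(difference_mean)  # 7, 6, 5, 4, 3: cooling continues at 1 deg.C per step
```

After the drop-out the padded average cools at only half the true rate, because the frozen proxy contributes a change of zero to every later step. The differenced series keeps following the proxies that are still reporting.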

This is just a guess, but it might be that the noise added by “differencing” doesn’t propagate much in the overall sum as long as it is randomly distributed.

The Arctic sea ice

do it justice Horatio

http://scholar.google.com.au/scholar?q=Eemian+Arctic+sea+ice+&btnG=&hl=en&as_sdt=0%2C5&as_ylo=2013

Apologies Horatio, wrong link

The 3-band graph offers one more reason to doubt the existence of a hidden global century-scale uptick comparable to the modern one, followed by a large-enough downtick to cancel most of it, that has real physics behind it and yet escapes the other proxies.

The central band (30degS to 30degN) has 50% of the Earth’s area.

The part that varies most strongly over century-millennia scale is the part with the most land area, the 30degN-90degN.

I have argued before that any reconstruction graph should include a clear legend for the region covered AND the fraction of the Earth’s surface, which would make spaghetti graphs make more sense and help people give appropriate weight to each band.

You note the northern latitudes have more temperature extremes than the data as a whole.

“Whereas the global temperature covers a range of about 0.7 deg.C, the northern region changes by more than twice that, a bit over 1.5 deg.C.”

Is this just continentality at work? The northern hemisphere has a much larger % of land than the earth as a whole, so it has more temperature extremes.

I believe so, yes. Especially the albedo effect part, with all that potential snow cover to add to the ice in Northern America and Eurasia.

D’oh! He’s right.

Yes, but it is worth thinking about the different {snow, ice}-albedo feedbacks, whether they are on land or water, and the relevant time-scales.

As far as I know, there are no such feedbacks in areas that are either covered with snow/ice year-round, or never have any snow/ice. So, neither Equator nor E. Antarctica are very relevant.

From slow to fast:

1) We have multi-millennial-scale Milankovitch-based ice-albedo feedback in NH on land, i.e., glaciers advance from Baffin Island, etc down to Kansas, but it takes a long time and covers a large area.

2) We have ice-albedo feedback in ocean and lakes, especially NH, which can certainly operate on much shorter timescales, even days.

3) We have snow-albedo feedback, which mostly matters where snow can happen, but does not always, i.e., near the snow line. A big snow late in Winter can keep an area cooler. This can even interact with 2), i.e., if it snows on a lake, the water gets a little colder, but if the lake freezes over, and then it snows, albedo(snow) is higher than albedo(ice), and it stays cold longer.

As usual, the Swiss take an interest.

Micro-scale effects can be observed by skiers.

Comments closed on “The Tick” so responding here.

“Which is it, Brian–McI ‘got it wrong,’ or ‘did nothing original?’ These two are not compatible.”

So Tamino holds incompatible positions. How is that my problem? That was part of my criticism of his claims.

keith

No – the Holocene Climatic Optimum resulted from precessional forcing (increased NH summer insolation) that waned ~5ka ago.

Yep — but continentality (plus albedo) modulated it.

…and actually the Milankovitch forcing that really matters for year-round high-latitude temperatures is the one related to the Earth axis tilt. Climatological precession again just shifts the forcing around the annual cycle.

Obliquity (modulated by eccentricity) triggered the glacial termination that was underway by ~19ka. Precessional forcing warmed the NH during the Holocene Climatic Optimum.

I’m coming late to this, but in addition to continentality and precessional forcing, the Northern Extratropics includes the North Atlantic which is unusually variable in its own right.

picturing the various tip-and-wiggle factors:

https://www.google.com/search?q=insolation+precession+orbit+climate&source=lnms&tbm=isch&sa=X&ei=zbNQUenXE-j2iwKa6oGACg&ved=0CAoQ_AUoAQ&biw=1038&bih=1011

Yes, the first image found is WTF …

Illustrating once again why fora such as these are useful for those who really engage in trying to understand what’s going on (but who are not (all) pros.)

Hi,

Why is the Marcott uptick happening mainly in the Southern Hemisphere when CO2 emissions and the instrumental temperature rise happened mainly in the Northern Hemisphere?

Thanks

Ben, there are fewer proxies for the southern hemisphere to begin with, so it is likely that dropout has a bigger effect there. This has nothing to do with the instrumental rise or ghg.

Speaking of value and conversations, this (otherwise) OT piece caught my eye on Sciencedaily.com:

http://www.sciencedaily.com/releases/2013/03/130325160520.htm

I hate the title, which is “Predictions of Climate Impacts On Fisheries Can Be a Mirage”–they show that predictions of other variables, like fishing intensity can create ‘mirages’, too.

But this bit sounds very interesting:

Correlation does not imply causation. Physics does.

“CO2 Modeling”

— by Horatio Algeranon

Global warming recently paused

Which CO2 has clearly caused

Changing its infrared absorption

To model skeptical contorption.

“e.e he looked so.. fat. and ugly. and every contorption was off..”

You know that, and I know that–probably even sciencedaily knows that!

Despite that apparently denier-baiting title, it wasn’t a piece saying that climate didn’t affect fisheries. It said that to obtain sensible results, single-factor studies didn’t work. What I thought interesting was this allegedly novel analytic methodology, ‘convergent cross-mapping.’ On the face of it, it sounds as though it could be useful for other vaguely attributional uses–some of which might be very interesting in the present context.

“Skeptical Contorption”

— by Horatio Algeranon

Tony Watts is quite contorped

His “analysis” is bent and warped

Twisted into a Möbius loop

And endless train of puppy poop

Perhaps it is possible to use this technique to prove who slapped first?

Not so interesting…

No, but it sure is funny.

Naturally brings to mind “Good Lord, Monckton, why are you slapping a monkey?”, which so many of us have wondered about for so long.

Ah, so THAT’S what he’s been doing…

Nit: 2nd graph is labeled 30N to 60N. That must be 30N to 90N.

Thanks Tamino. This has been a great series of posts, particularly in light of the off-shift response to Marcott et al. in the denialosphere. And by the way: http://nsidc.org/arcticseaicenews/ 6th lowest maximum on record….

re Sugihara et al, and “convergent cross mapping” somewhat better info from http://www.constantinealexander.net/2013/03/26/index.html

——-excerpt follows——-

… For example, based on data from the Scripps Institution of Oceanography Pier, studies in the 1990s showed that higher temperatures are beneficial for sardine production. By 2010 new studies proved that the temperature correlation was instead a misleading, or “mirage,” determination.

“Mirages are associations among variables that spontaneously come and go or even switch sign, positive or negative,” said Sugihara. “Ecosystems are particularly perverse on this issue. The problem is that this kind of system is prone to producing mirages and conceptual sand traps, continually causing us to rethink relationships we thought we understood.”

By contrast, convergent cross mapping avoids the mirage issue by seeking evidence from dynamic linkages between factors, rather than one-to-one statistical correlations….

—-end excerpt——-

Sounds like the same story as on science daily…

Another excerpt, this from

http://www.bloomweb.com/big-data-in-advertising-cause-and-effect/

—- excerpt follows—-

Sugihara and his colleagues developed their tool specifically for complex ecosystem analysis, yet its applications could have far-ranging implications across multiple areas of science. For example, “one could imagine using it with epidemiological data to see if different diseases interact with each other or have environmental causes,” said Sugihara.

Sugihara and other Scripps scientists are now applying this tool to study specific ocean phenomena, such as the seemingly unpredictable harmful algal blooms that occur in various coastal regions from time to time….

—-end excerpt —–

—–

Question for the statistically competent — does this method somehow say there are, say, a dozen factors, three of them big enough to worry about, and we can put a name on two of those and aren’t sure what the third one is but we can tell for sure there’s -a- big factor to identify (versus just lots of noise)? It -sounds- to this illiterate like principal component analysis, if so.

[Response: I don’t know enough about the method to give an opinion.

I would urge caution, because in my experience there are four most likely results of any revolutionary new mathematical method. From most to least likely, these are: 1. There’s a fundamental flaw the inventors didn’t anticipate; 2. It works for the given application but isn’t nearly as good a general method as they think; 3. Gauss already invented it in the early 1800s; 4. It’s as good as claimed.]

PS: I predict an industry attack on the notion of using “epidemiological data to see if different diseases … have environmental causes” if so.

Industry’s been comfortable so far in the US because under US law (no “precautionary principle”) it takes demonstrated harm before any constraint can be put on the industry’s freedom to innovate and disperse new chemicals.

“No immediate and significant hazard to public health” is a familiar phrase from industry PR, as in “you can’t prove it’s a problem.”

One might have thought that we’d learn something from the ozone hole affair–this quote from RC commenter Chris Hogan has stuck with me:

One further quote from that “Big Data in Advertising” blog story on this:

—–Excerpt follows:

Sugihara says his new test can deal with two-way causality. What’s more, the test can ferret out various causal linkages in systems with several variables. CCM asks whether one variable predicts another, much like Granger causality. To deal with the two-way causality problem, each data set is put through mathematical transformations, creating a three-dimensional shape called a manifold. Points on one manifold may be used to predict points on the other, but not necessarily the other way round. That means causal relationships, of one direction or another, can be measured separately.

Sugihara tested his method on real-world case where the causal relationships have already been established, but the jury remains out on CCM. According to New Scientist magazine, several statisticians said CCM looks promising. “It will be worth studying in more detail,” says Steffen Lauritzen of the University of Oxford. Others are less keen. According to Judea Pearl, who studies causality at UCLA, the paper may be theoretically flawed. The starting point of any study is a hypothesis about why a causal relationship exists, he says. Sugihara’s test instead involves scouring data for links and then retrofitting a hypothesis to suit….”

Hank, thanks for following up on my casual question. It still sounds interesting, but we shall see…

If these results are reliable, could they be used to test the accuracy of climate models to reproduce the past variations due to astronomical changes? I think it would be an important step to convince people that the models can be trusted. After all these results are within the same order of magnitude of current observed variations and should provide a good benchmark of our capabilities of predicting the result of changes in forcing.

Jim Bouldin Says:

March 24, 2013 at 10:16

I’ve appreciated from day one what you try to do in these discussions Bart, but this kind of thing proves nothing really. I realize this was not written by you, but the point remains. There are gaping holes big enough to drive trains through in much of paleoclimatology and yet people still glom on to this stuff as if it actually means something important.

I never cease to be amazed how the online climate discussions move from fixation to fixation on particular studies, someone initiating and invariably a whole bunch of others then dutifully following, without even addressing the fundamental questions that make or break such studies, while the rest of the literature gets ignored.

Don’t people in this field ever get tired of this crap?

> If these results are reliable

science is never done (What? never? Well, hardly ever) and getting enough background in an area of study can leave the amateur more rather than less confused. I remember when I took plant physiology as an undergrad it was in years when the field had thought it had gotten a mature and clear idea of how water climbed tall trees, and people had been methodically experimenting with live trees, trying to knock out one way or another water could be going up.

Well, the year* I took the class we studied all the theories about how water could go up tall trees, and studied the last few years’ accumulation of papers finding that various notions weren’t right. And at the end — nobody had a good idea even what to try next, the damn water kept climbing the damn trees. Slices cut more than halfway through, alternating; heat; radiation; poisoning; I dunno what else. They tried everything.

Science gets like that — and stays interesting.

_______

* in the early part of the latter half of the last century of the past millennium

Well, I have no doubt that science is interesting. I was only wondering how well GCMs could fit the past variations on a millenary scale, or even be improved by a comparison with more data. After all, it’s the usual way to reduce the uncertainties.

Tamino, McI has accused you of plagiarism over at dot earth, and Revkin seems to buy the claim. You might want to contact him.

“Steve McIntyre, Toronto, Canada

Andy,

The ideas in Tamino’s post purporting to explain the Marcott uptick, https://tamino.wordpress.com/2013/03/22/the-tick/ which you praise as “illuminating”, was shamelessly plagiarized from the Climate Audit post How Marcott Upticks Arise. http://climateaudit.org/2013/03/15/how-marcottian-upticks-arise/

It’s annoying that you (and Real Climate) would link to the plagiarization and not to the original post.

April 1, 2013 at 9:25 a.m.

Andy Revkin, Dot Earth blogger

I had no idea this was an issue until you commented here just now — which is one reason I blog. As David Weinberger has written, the room is indeed smarter than anyone in the room.

“

Sorry, posted this in the wrong thread, he’s accusing you of plagiarizing him regarding the uptick being caused by proxy dropouts, which was the subject of one of your other posts.

I keep thinking they’ve hit bottom over there, and they keep raising the ante. Whatever next?!

I don’t know if it is more helpful to correct the record, given the clubby and foreign (to DotEarth) nature of the attack, or to preserve a dignified silence.

However, dhogaza describes the problem exactly. Who touches pitch is defiled.

I don’t mean to say that DE doesn’t host a lot of claptrap, only that this particular variation is orchestrated at a pretty high level and mostly not by the usual suspects.